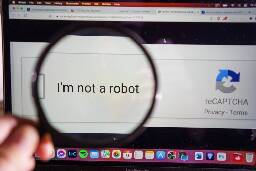

Forget security – Google's reCAPTCHA v2 is exploiting users for profit | Web puzzles don't protect against bots, but humans have spent 819 million unpaid hours solving them

Research Findings:

- reCAPTCHA v2 is not effective in preventing bots and fraud, despite its intended purpose

- reCAPTCHA v2 can be defeated by bots 70-100% of the time

- reCAPTCHA v3, the latest version, is also vulnerable to attacks and has been beaten 97% of the time

- reCAPTCHA interactions impose a significant cost on users, with an estimated 819 million hours of human time spent on reCAPTCHA over 13 years, which corresponds to at least $6.1 billion USD in wages

- Google has potentially profited $888 billion from cookies [created by reCAPTCHA sessions] and $8.75–32.3 billion for each sale of its total labeled data set

- Google should bear the cost of detecting bots, rather than shifting it to users

"The conclusion can be extended that the true purpose of reCAPTCHA v2 is a free image-labeling labor and tracking cookie farm for advertising and data profit masquerading as a security service," the paper declares.

In a statement provided to The Register after this story was filed, a Google spokesperson said: "reCAPTCHA user data is not used for any other purpose than to improve the reCAPTCHA service, which the terms of service make clear. Further, a majority of our user base have moved to reCAPTCHA v3, which improves fraud detection with invisible scoring. Even if a site were still on the previous generation of the product, reCAPTCHA v2 visual challenge images are all pre-labeled and user input plays no role in image labeling."

There are much better ways of rate limiting that don't steal labor from people.

hCaptcha and Microsoft CAPTCHA do the same. Can you give an example of one that can't easily be overcome just by better compute hardware?

The problem is the unethical use of software that does not do what it claims and instead uses end users as free labor. The solution is not to use it. For rate limiting, a proxy/load balancer like HAProxy will accomplish the task easily. For example:

https://www.haproxy.com/blog/haproxy-forwards-over-2-million-http-requests-per-second-on-a-single-aws-arm-instance

https://www.haproxy.com/blog/four-examples-of-haproxy-rate-limiting
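To make the idea concrete, here is a minimal Python sketch of the kind of per-client request-rate limit those posts describe. It is not HAProxy configuration and not how HAProxy implements its counters; `SlidingWindowLimiter`, `limit`, `window`, and `clock` are hypothetical names chosen for illustration.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per `window` seconds for each key.

    Hypothetical illustration only; a real proxy keeps these counters
    in shared, size-bounded tables with expiry.
    """

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.hits = defaultdict(deque)  # key -> timestamps of accepted requests

    def allow(self, key):
        now = self.clock()
        q = self.hits[key]
        # Drop timestamps that have fallen out of the window.
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) >= self.limit:
            return False  # over the limit: the caller would answer 429
        q.append(now)
        return True
```

A server would call something like `limiter.allow(client_ip)` once per request and reject the request when it returns `False`. No human has to label anything for this to work.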

And what will you do if someone behind a CGNAT is DoSing/scraping your site while you still want the others sharing that address to have access? IP-based limiting isn't very useful either way: it blocks too much or too little.

HAProxy also has stick tables, pretty beefy ACLs, Lua support, and support for calling external programs. With the first two, one can do pretty decent IP-, behavior-, and header-based throttling, blocking, or tarpitting. Add in Lua and external program support and you can do some pretty advanced and high-performance bot detection in your language of choice. All in the FOSS version, which also includes active backend health checks.

It's really a pretty awesome LB/Proxy.
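As a rough sketch of what header-aware throttling with graded responses could look like, here is a small Python example. It is an assumption-laden illustration, not HAProxy syntax: `client_key`, `Throttle`, the choice of headers, and the `soft`/`hard` thresholds are all hypothetical. Keying on more than the address means clients sharing one CGNAT IP are counted separately, though headers can be spoofed, so this only raises the bar.

```python
import time
from collections import defaultdict, deque


def client_key(ip, headers):
    """Combine the address with request headers (hypothetical choice of headers)."""
    return (ip, headers.get("User-Agent", ""), headers.get("Accept-Language", ""))


class Throttle:
    """Return "allow", "tarpit", or "deny" based on the recent request count."""

    def __init__(self, soft, hard, window, clock=time.monotonic):
        self.soft = soft      # above this many requests per window: slow the client down
        self.hard = hard      # above this many requests per window: reject outright
        self.window = window  # window length in seconds
        self.clock = clock
        self.seen = defaultdict(deque)  # key -> timestamps of all recent requests

    def decide(self, ip, headers):
        now = self.clock()
        q = self.seen[client_key(ip, headers)]
        # Forget requests older than the window.
        while q and now - q[0] >= self.window:
            q.popleft()
        q.append(now)  # every request counts, including ones that get rejected
        if len(q) > self.hard:
            return "deny"
        if len(q) > self.soft:
            return "tarpit"
        return "allow"
```

With `Throttle(soft=2, hard=3, window=60)`, a client's third request inside a minute is tarpitted and its fourth is denied, while a different browser behind the same address still starts from zero.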