GNOME June 2024: C'mon you can do better

It depends on what you're self-hosting and if you want / need it exposed to the Internet or not. When it comes to software, the current hype is to set up a minimal Linux box (old computer, NAS, Raspberry Pi) and then install everything using Docker containers. I don't like this Docker trend because it 1) leads you towards a dependence on proprietary repositories and 2) robs you of the experience of learning Linux (more here), but it does lower the bar for newcomers and lets you set up something really fast. In my opinion you should be very skeptical about everything that is "sold to the masses": just go with a simple Debian system (command line only), SSH into it and install what you really need, take your time to learn Linux and whatnot.

Strictly speaking about security: if we're talking about LAN only, things are easy and you don't have much to worry about, as everything will be inside your network and thus protected by your router's NAT/firewall.

For internet facing services your basic requirements are:

Quick setup guide and checklist:

Realistically speaking, if you're doing this just for a few friends, why not require them to access the server through a WireGuard VPN? This will reduce the risk a LOT and probably won't impact the performance. Here is a decent setup guide and you might use this GUI to add/remove clients easily.

Don't be afraid to expose the WireGuard port, because if someone tries to connect and doesn't authenticate with the right key the server will silently drop the packets.
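To make the "silently drop" idea concrete, here is a toy sketch. It is NOT WireGuard's real handshake (that is built on the Noise framework and public keys); it just assumes a shared secret and an HMAC tag to show the behaviour: packets that don't authenticate get no reply at all, so a port scanner can't even tell something is listening. The names `seal` and `handle_packet` are made up for the example.

```python
import hashlib
import hmac
from typing import Optional

TAG_LEN = 32  # SHA-256 digest size in bytes


def seal(key: bytes, payload: bytes) -> bytes:
    """Client side (toy): append an HMAC tag so the server can authenticate the packet."""
    return payload + hmac.new(key, payload, hashlib.sha256).digest()


def handle_packet(key: bytes, packet: bytes) -> Optional[bytes]:
    """Server side (toy): reply to authentic packets, return None (send nothing) otherwise."""
    if len(packet) < TAG_LEN:
        return None  # too short to even carry a tag: drop without answering
    payload, tag = packet[:-TAG_LEN], packet[-TAG_LEN:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        return None  # wrong key: drop silently, no error message goes back
    return b"ack:" + payload
```

A packet sealed with the right key gets an answer, anything else gets silence, which is roughly why an open WireGuard port doesn't show up as "something to attack".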

Now if your ISP doesn't provide you with a public IP / port forwarding abilities you may want to read this in order to find out why you should avoid Cloudflare tunnels and how to set up an alternative / more private solution.

Oh well, if you think you're good with Docker go ahead and use it, it does work but has its own dark side...

cause its like a micro Linux you can reliably bring up and take down on demand

If that's what you're looking for, maybe a look at Incus/LXD/LXC or systemd-nspawn will be interesting for you.

I hope the rest can help you have a more secure setup. :)

Another thing that you can consider is: instead of exposing your services directly to the internet, use a VPS as a tunnel / reverse proxy for your local services. This way only the VPS IP will be exposed to the public (and it will be a static and stable IP) and nobody can access the services directly.

client ---> VPS ---> local server

The TL;DR is installing a WireGuard "server" on the VPS and then having your local server connect to it. Then set up something like nginx on the VPS to accept traffic on port 80/443 and forward it to whatever you have running on the home server through the tunnel.
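As a rough sketch of the nginx side, here is a small Python helper that renders a server block forwarding plain HTTP to the home server's address inside the tunnel. The function name, the domain, the tunnel IP `10.8.0.2` and the port `8080` are all assumptions for illustration; TLS on 443 is left out and would need certificates on top of this.

```python
def render_nginx_site(domain: str, tunnel_ip: str, port: int) -> str:
    """Render an nginx server block that forwards HTTP traffic through the tunnel."""
    return f"""server {{
    listen 80;
    server_name {domain};

    location / {{
        # Forward to the home server's address inside the WireGuard tunnel.
        proxy_pass http://{tunnel_ip}:{port};
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
    }}
}}
"""


if __name__ == "__main__":
    # Hypothetical values: replace with your own domain and tunnel address.
    print(render_nginx_site("example.org", "10.8.0.2", 8080))
```

The point is only that the VPS never needs to know your home IP: it talks to the tunnel address and the home server is the one dialing out.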

I personally don't think there's much risk with exposing your home IP as part of your self-hosting, but some people do. It also depends on what protection your ISP may offer and how likely you think a DDoS attack is. If your ISP provides you with a dynamic IP it may not even matter, as a simple router reboot should give you a new IP.

That looks like a DDoS. For instance, that doesn't ever happen on my ISP as they have some kind of DDoS protection running, akin to what we would see on a decent cloud provider. Not sure what tech they're using, but there's certainly some kind of rate limiting there.

- Isolate the server from your main network as much as possible. If possible have it on a different public IP, either using a VLAN or, better yet, with an entire physical network just for that - this avoids VLAN hopping attacks and DDoS attacks on the server that would also take your internet down;

In my case I can simply have a bridged setup where my Internet router gets one public IP and the exposed services get another / different public IP. If there's ever a DDoS, the server might be hammered with requests and go down, but unless they exhaust my full bandwidth my home network won't be affected.

Another advantage of having a bridged setup with multiple IPs is that when there's a DDoS/bruteforce then your router won't have to process all the requests coming in, they'll get dispatched directly to your server without wasting your router's CPU.

As we can see, this thing about exposing IPs depends on very specific implementation details of your ISP or your setup so... it may or may not be dangerous.

Note: iptables is "deprecated"; you should be using nftables. Even Debian is on nftables nowadays.

Sublime Text is much faster to use as a quick editor and IntelliJ is much better as a full featured IDE and totally worth the cost. IntelliJ saves me a ton of time on merge conflicts, it's much faster in large projects, its code analysis to find unused stuff and issues is better... VSCode can handle merges but it requires extensions and isn't as good / you'll have to do more manual work.

While I don’t totally disagree with you, this has mostly nothing to do with Windows and everything to do with a piece of corporate spyware garbage that some IT Manager decided to install. If tools like that existed for Linux, doing what they do to the OS, trust me, we would be seeing kernel panics as well.

It's called: vendor lock-in.

Dear open source app user: feel free to improve the README file of the projects you come across by adding a few screenshots you believe are relevant.

"if you can't parse tabs as whitespace, you should not be parsing the kernel Kconfig files." ~ Linus Torvalds

This is what we got after people sent him into PC training. The OG Linus would say something like "if you're a piece of s* that can't get over your a** to parse tabs as whitespace you should be ashamed to walk on this planet let alone parsing the kernel Kconfig files. What a f* waste of space."

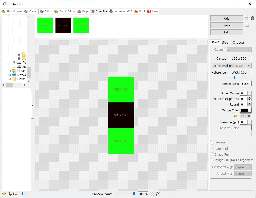

No, no, this is the peak OS installation menu:

😜

Although I understand the OP's perspective, open-source is a community effort and people should have a more proactive attitude and contribute when they feel things aren't okay. Most open-source developers aren't focused on / don't have time for how things look (or at least not in the beginning). If you're a regular user and you can spend an hour taking a bunch of screenshots and improving a readme, you'll be doing more for the future of the project than you might think.

Although we’ll be hosting the repository on GitHub

Why aren't they using a self-hosted instance of Gitea? It makes no sense to move to GitHub of all places.

One Common Linux Myth You Should Stop Believing: there's a FOSS alternative to every single proprietary software out there that can be used as a replacement in all and every use case.

Transmission is good precisely because it does one thing and one thing really well - download torrents. No other crap, spam and non-related garbage required.

So Germany has been moving back and forth between Microsoft and Linux / open-source.

When Munich decided to ditch many of its Windows installations in favor of Linux in 2003, it was considered a groundbreaking moment for open source software -- it was proof that Linux could be used for large-scale government work. However, it looks like that dream didn't quite pan out as expected. The German city has cleared a plan to put Windows 10 on roughly 29,000 city council PCs starting in 2020. There will also be a pilot where Munich runs Office 2016 in virtual machines. The plan was prompted by gripes about both the complexity of the current setup and compatibility headaches.

Do you know what this smells like? Corruption and consulting companies with friends in the govt looking for ways to profit.

What else can be more profitable for a consulting company than shifting the entire IT of a city or a country between two largely incompatible solutions? :)

Yes, I love it and don't get me wrong but there are many downsides and they all result from poor planning and/or bad decisions around how flatpak was built. Here are a few:

Flatpak acts as a restrictive sandbox experience that is mostly about "let's block things and we don't care about anything else". I don't think it's reasonable to have situations like applications that don't pick up the system theme / font without the user creating a bunch of links or installing more copies of whatever they already have. Flatpak in general was a good idea, but the execution of the system integration is a shame.

printer, colour laserjet, that is from another ‘region’,

What the fuck HP. I've been using cheap cartridges from Aliexpress without issues.

You never tried to listen for stock Firefox's traffic with Wireshark for sure.

People say very good things about Firefox but they like to hide and avoid the shady stuff. Let me give you the uncensored version of what Firefox really is. Firefox is better than most, no doubt there, but at the same time they do have some shady finances and they also do stuff like adding unique IDs to each installation.

Firefox does a LOT of calling home. Just fire up Wireshark alongside it and see how much calling home and even calling 3rd parties it does. From basic OCSP requests to calling Firefox servers and a 3rd party company that does analytics, they do it all, even after disabling most stuff in Settings and config like the OP did.

I know other browsers do it as well, except for Ungoogled and because of that I’m sticking with it. I would like to avoid programs that need to snitch whenever I open them. ungoogled-chromium + uBlock Origin + Decentraleyes + ClearURLs and a few others.

Now you’re free to go ahead and downvote this post as much as you would like. I’m sorry for the trouble and mental break down I may have caused by the sudden realization that Firefox isn’t as good and private after all.

Blockchain and/or smart contracts try to solve problems that were already solved in multiple ways, while adding a ton of overhead that makes them unsuitable for large scale deployment and long term usage.

Here's what's stupid about the people who say that blockchain will revolutionize the financial sector: why add a blockchain and all the computing power to store transactions when you can take the obviously efficient route and simply store transactions in a SQL database? Before anyone screams the word "decentralization", do you really think banks will cease to exist? NO. The most likely scenario - if people keep pushing this bullshit - will be to have some kind of closed blockchain that banks use to transact money, so it essentially becomes the same thing we have now with added overhead, environmental impact and technical complexity. We have efficient systems in place with safeguards, operations can be tracked, reversed etc.

Frankly it would be a better use of everyone's time, money and effort to simply fix the REAL problems in the banking industry, such as the fact that the US still doesn't have a decently working, standardized digital system to transfer money between account holders in different banks. Europe has this with SEPA/IBAN and people can transfer money between accounts, banks and countries almost instantly by just providing the amount they want to transfer and an IBAN number (nothing else required). Now tell me, how many people in the US have bank accounts with IBAN numbers? Most likely only millionaires. The majority of people use a poorly structured system of account and routing numbers that often fails and leads to delays. Oh btw Russia has a similar system to IBAN.
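Part of why "just give me your IBAN" works is that the number carries its own check digits, so most typos are caught before any money moves. A minimal sketch of the standard mod-97 check follows; it assumes only the generic rule (move the first four characters to the end, map letters to 10..35, the result modulo 97 must be 1) and does not check per-country lengths or formats. The function name is made up for the example.

```python
def is_valid_iban(iban: str) -> bool:
    """Check an IBAN's overall length, character set and mod-97 checksum."""
    compact = iban.replace(" ", "").upper()
    # IBANs are between 15 and 34 ASCII letters/digits; country-specific
    # lengths are not verified in this sketch.
    if not (15 <= len(compact) <= 34):
        return False
    if not (compact.isascii() and compact.isalnum()):
        return False
    # Move country code + check digits to the end, then map A..Z to 10..35.
    rearranged = compact[4:] + compact[:4]
    digits = "".join(str(int(ch, 36)) for ch in rearranged)
    return int(digits) % 97 == 1


if __name__ == "__main__":
    # Commonly published example IBAN, not a real account.
    print(is_valid_iban("GB82 WEST 1234 5698 7654 32"))
    # Same number with the last digit mistyped.
    print(is_valid_iban("GB82 WEST 1234 5698 7654 33"))
```

So a single mistyped digit makes the whole number fail the check, which is a safeguard a bare account + routing number pair doesn't give you in the same way.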

There are tons of other weaknesses in the US banking system around the way credit and debit cards work. For instance, why would anyone in their right mind assume that a system where you can provide your credit card number and CVV/CVC code over the phone to make a transfer wouldn't be abused to scam people and steal money? Then, after decades of fraud, to "alleviate" the issue they decided to create a bunch of companies that offer virtual credit cards with limits. Now here is how this works in most European countries: banks will, most likely, refuse any attempt at charging a physical credit card unless it's made on a physical payment terminal with the card actually physically inserted in the thing and a 4-digit PIN code typed in. If you want to buy shit over the internet simply open your bank's app or website and they'll have a function to create a single use virtual credit card for the transaction. Way more secure isn't it? :) Either way most European countries also have other systems to handle those kinds of payments, e.g. the online store provides you with a specific code and you can then go to any ATM or your bank's app, insert the code and make the payment.

As you can see, making the banking system efficient and having fast, secure and usable things isn't about blockchain bullshit, it's usually more about common sense and creating standards that companies, such as banks, have to comply with.

Linux in corporations fails in multiple ways. The most prevalent is that people need to collaborate with others who use proprietary software such as MS Office that isn't available for Linux, and the alternatives such as LibreOffice just aren't good enough. It all comes down to ROI: the cost of Windows/Office for a company is lower than the cost of dealing with the inconsistencies in format conversions, people who don't know how to use the alternative X etc etc. This issue is so common that companies usually also avoid Apple for the same reason: while on macOS you have a LOT more professional software, it is still very painful to deal with the small inconsistencies and whatnot.

Linux desktop is great, I love it, but it has it even worse than Apple. Here are some use cases that aren't easy to deal with on Linux:

If one lives in a bubble and doesn’t need to collaborate with others then native Linux apps might work and might even deliver a decent workflow. Once collaboration with Windows/Mac users is required then it’s game over - the “alternatives” just aren’t up to it.

Windows licenses are cheap and things work out of the box. Software runs fine, all vendors support whatever you’re trying to do and you’re productive from day zero. Sure, there are annoyances from time to time, but they’re way fewer and simpler to deal with than the hoops you’ve to go through to get a minimal and viable/productive Linux desktop experience. It all comes down to a question of how much time (days? months?) you want to spend fixing things on Linux that simply work out of the box under Windows for a minimal fee. Buy a Windows license and spend the time you would’ve spent dealing with Linux issues doing your actual job and you’ll, most likely, get a better ROI.

From a more market / macro perspective here are some extra reasons:

Unfortunately things are really poised and rigged against open-source solutions and anyone who tries to push for them. The "experts" who work in consulting companies are part of this as they usually don't even know how to do things without the proprietary solutions. Let me give you an example: once I had to work with E&Y, one of those big consulting companies, and I realized some awkward things while having conversations with both low level employees and partners / middle management: they weren't aware that there are alternatives most of the time. A manager of a digital transformation and cloud solutions team, who started his career at E&Y, wasn't aware that there were open-source alternatives to Google Workspace and Microsoft 365 for e-mail. I probed a TON around that and the guy, a software engineer with a university degree, didn't even know what Postfix was or the history of email.

You will never get the same font rendering on Linux as on Windows as Windows font rendering (ClearType) is very strange, complicated and covered by patents.

Font rendering is also kind of a subjective thing. To anyone who is used to macOS, Windows font rendering looks wrong as well. Apple's font rendering renders fonts much closer to how they would look printed out. Windows tries to increase readability by reducing blurriness and aligning everything perfectly with pixels, but it does this at the expense of accuracy.

Linux's font rendering tends to be a bit behind, but is likely to be more similar to macOS than to Windows rendering as time goes forward. The fonts themselves are often made available by Microsoft for using on different systems, it's just the rendering that is different.

For me, on my screens, just installing Segoe UI and tweaking the hinting / antialiasing under GNOME settings makes it really close to what Windows delivers. The default Ubuntu font, Cantarell and Sans don't seem to be very good fonts for a great rendering experience.

The following links may be of interest to you:

The technology has "been there" for a while and it's trivial to set up what you're asking for; the issue is that games have anti-cheat engines that will get triggered by the virtualization and ban you.

Its literally a violation of the EU human rights agreement…

Is it? In Portugal there has been a similar law for years and nobody cares apparently. It isn't as wide as the Italian one, it just says ISPs are required to block access to websites a govt. entity lists.

Also no company will comply with that shitshows ridiculous orders.

Are you sure? Think about it... “All VPN and open DNS services must also comply with blocking orders”. A VPN provider can’t legally sell their services in Italy unless they comply. The best part is: since the govt is blocking websites they can also block providers who don’t play according to their rules :)

Stick with LibreOffice, you'll get used to it and it is most likely the best alternative.

So... this was the plan of the Standard Notes guys all along? Now it makes sense why they never made open-source and self-hosting a true priority.

Let's see what Proton does with this, but I personally believe they'll just integrate it into Proton and close things down even more. The current subscription-based model, Docker container and whatnot might disappear as well. Proton is a greedy company that doesn't like interoperability and likes to add features designed in a way to keep people locked into their web UI and applications.

Standard Notes for self-hosting was already mostly dead due to the obnoxious subscription price, but it is a well designed app with good cross-platform support and I just wish the Joplin guy would take a cue from them on how to design UIs instead of whatever they're doing now that is ugly and barely usable.

“This is an isolated, ‘one-of-a-kind occurrence’ that has never before occurred with any of Google Cloud’s clients globally. This should not have happened.

I don't believe this is that rare; what I believe is that this was the first time it happened to a company with enough exposure to actually have an impact and reach the media.

Either way Google's image won't ever recover from this. They just lost what small credibility they had in the cloud space and won't even be considered again by any institution in the financial market - you know, the people with the big money - and there's no coming back from this.

Thunderbird.

My understanding is that domains do expire unless you pay the fee to renew for another year.

The problem is that this isn't what happens today. If you register a domain and never pay for it again then providers will often renew the domain, keep it for themselves and try to resell it later. This is one of the biggest issues in the domain name market and GoDaddy is one of the worst offenders.

Regarding unused domain names, how would anyone know if a particular name is being unused?

Yes that's a good question, but I'm sure that ICANN with all its wisdom and infinite resources and teams could define something reasonable. I believe the first step could be to simply make sure registrars can't do what I described before.

It depends. They're simply the most annoying drives out there because Seagate in their wisdom decided to remove half of the SMART data from reports and they won't let you change the power settings like other drives. Those drives will never spin down; they'll even report to the system that they're spun down while in fact they'll still be running at a lower speed. They also make a LOT of noise.

Here is my experience with it.

Up until last week I've had friends (who also use uBlock Origin, same country) kind of sequentially complaining for about a month about having the videos blocked. For me personally it has been working fine until then, but Friday I got the popup. Today the popup is gone however I get ads but they don't play video, only sound.

YouTube isn’t rolling out the anti-adblock to everyone. It seems to depend on things like your account, browser, and IP address. And if you’re not logged in or you’re in a private window, you’re safe. As a result, there are a bunch of people saying, “I use XYZ and I haven’t seen an anti-adblock popup yet,” unknowingly spreading misinformation.

What I see with this is that Google might eventually lose more with these new policies than by just leaving things as they were. Let's be real, if this shit show continues and they don't drop it, as it becomes increasingly difficult to watch without ads people will start looking for alternative frontends such as Piped or Invidious and that will hinder their ability to harvest data and force ads. What's the next step Google? DRM protected media?

Why does Linux run so well everywhere?

I'm just going to point out that besides containers, systemd can now manage virtual machines:

systemd version we added systemd-vmspawn. It's a small wrapper around qemu, which has the point of making it as nice and simple to use qemu as it is to use nspawn.

The idea is that we provide a roughly command line equivalent interface to VMs as for containers, so that it really is as easy to invoke a VM as it already is to invoke a container, supporting both boot from DDIs and boot from directories.

"You may not like it, but this is what peak performance looks like."

Your next OS will be... Debian. Because you care about your time and you want stuff to be stable.

Why is Debian the way it is?

Because it's stable. Not some poorly bundled OS that has broken installers on their website for weeks like Ubuntu does sometimes.

He makes a few good points: developers are becoming hostages of those cloud platforms. We now have a generation of developers that doesn’t understand the basics of their tech stack, about networking, about DNS, about how to deploy a simple thing to a server that doesn’t use some Docker BS or isn’t a 3rd party cloud xyz deploy-from-github service.

Companies such as Microsoft and GitHub are all about re-creating and reconfiguring the way people develop software so everyone will be hostage of their platforms. We see this in everything now: Docker/DockerHub/Kubernetes and GitHub Actions were the first signs of this cancer.

Before anyone comments that Docker isn't totally proprietary and there's Podman consider the following: It doesn’t really matter if there are truly open-source and open ecosystems of containerization technologies. In the end people/companies will pick the proprietary / closed option just because “it’s easier to use” or some other specific thing that will be good on the short term and very bad on the long term.

The latest endeavor in making everyone a hostage is the new Linux immutable distribution trend. Immutable distros are all about turning things that were easy into complex, “locked down”, “inflexible” bullshit to justify jobs, paid tech stacks and a soon-to-be-released proprietary solution. We had Ansible, containers, ZFS and BTRFS, which already provided all the immutability needed, but someone decided that it is time to transform proven development techniques in the hopes of eventually selling some orchestration and/or other proprietary repository / platform, like Docker / Kubernetes does.

“Oh but there are truly open-source immutable distros” … true, but again this hype is much like Docker and it will inevitably lead people down a path that will then require some proprietary solution or dependency somewhere (DockerHub) that is only required because the “new” technology alone doesn’t deliver as others did in the past. Those people now popularizing immutable distributions clearly haven’t had any experience with them before the current hype. Let me tell you something: immutable systems aren’t a new thing. We already had them with MIPS devices (mostly routers and IoT devices) and people have been moving to ARM and mutable solutions because it’s better, easier and more reliable.

The RedHat/CentOS fiasco was another great example of these ecosystems and once again all those people who got burned, instead of moving to a true open-source distribution like Debian, decided to pick Ubuntu - it's just a matter of time until Canonical decides to make some move.

Nowadays, without Internet and the ecosystems people can’t even do shit anymore. Have a look at the current state of things when it comes to embedded development, in the past people were able to program Arduino boards offline and today everyone moved to ESP devices and depends on the PlatformIO + VSCode ecosystem to code and deploy to the devices.

Speaking about VSCode, it is also open-source until you realize that 1) the language plugins that you require can only be compiled and run in official builds of VSCode and 2) Microsoft took over a lot of the popular 3rd party language plugins and repackaged them with a different license... making it so that if you try to create a fork of VSCode you can’t have any support for any programming language because it won’t be an official VSCode build. MS be like :).

All those things that make development very easy and lowered the bar for newcomers have the dark side of being designed to reconfigure and envelop the way development gets done so someone can profit from it. That is sad and above all sets dangerous precedents and creates generations of engineers and developers that don’t have truly open tools like we did.

The “experts” who work in consulting companies are part of this as they usually don’t even know how to do things without the proprietary solutions. Let me give you an example: once I had to work with E&Y, one of those big consulting companies, and I realized some awkward things while having conversations with both low level employees and partners / middle management: they weren’t aware that there are alternatives most of the time. A manager of a digital transformation and cloud solutions team, who started his career at E&Y, wasn’t aware that there were open-source alternatives to Google Workspace and Microsoft 365 for e-mail. I probed a TON around that and the guy, a software engineer with a university degree, didn’t even know what Postfix was or the history of email.

postmarketOS just gained my respect. To be fair there's no point in running a Linux system without systemd as you'll end up installing 32434 different RAM wasting services to handle things like cron, dns, ntp, mounts, sessions, log management etc.

They forgot the part where margins should be included on things... once again.