Can somebody solve this puzzle?

No, no. Don't blame yourself. You did nothing wrong here. Scientifically speaking, we still have no clear answer on how a person's sexuality is determined. So far, the consensus is that a biological factor is also in play.

It is not your failure as a partner. These things are beyond your control. She can't do much about it either. Therapy won't change the underlying reality. It will just help you cope with the hard realities you are facing.

I highly recommend you start individual therapy if you haven't already. You may have to untangle decades of experiences to come to terms with it. It's never too late, and the right therapist will definitely improve how you handle this.

Oh, so sorry. I didn't realise you were talking about your own situation. I thought the first comment was just a thought experiment. I didn't pay enough attention. My bad.

In your case, I guess she may be on the asexual part of the spectrum. One of my friends is facing a similar situation. The partner has no sex drive at all, but is a great person in every other area. That relationship has lasted because my friend also has a low sex drive, though a higher one than the partner's.

Since this has gone on for so long, I assume you have already tried couples therapy and individual therapy. If not, that is something you can try.

But keep in mind that if your partner really is asexual, there isn't much you can do. It's not their fault in any way. So either you accept the situation and build a life around this fact, or you move on. Since you have been in the relationship for a long time, I guess everything else is going well. That means you have already chosen the first option.

A couple of pointers. One, if she is on any medication, check for side effects. That includes birth control pills. Two, you mentioned that she neither masturbates nor has experience, so I would suggest she try masturbating if she is okay with it. That may help her discover more about her body.

Also, if she lacks experience, it can take months for her to be completely comfortable and enjoy it, because relaxing doesn't come easily to everyone.

I think the point here is to give it more time and explore as much as possible. No conclusive statements, like "this method will or will not work", can or should be made, considering the short time they have been together. There are multiple options the OP and the partner can try if they want.

TBH, I am glad the OP is thinking about the long term. The more they explore, the more certain they will be about what they can and cannot do.

I don't think that is how it works.

You can learn how these top-level domains came into existence from this video:

You'll never guess the most popular internet country code

Some ccTLDs have been retained by ICANN even after the country ceased to exist. The Soviet Union's .su is an example.

For those who are confused, the comment meant to say:

`52*51*50*...*3*2*1`

i.e. 52 × 51 × 50 × ... × 3 × 2 × 1

Markdown syntax screwed it up: the asterisks were interpreted as italics markers.
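For the curious, that product is just 52 factorial (52!). A quick Python sketch (Python is my choice here, any language with arbitrary-precision integers works) that expands the product explicitly and checks it against the standard library:

```python
import math

# Expand 52 × 51 × 50 × ... × 3 × 2 × 1 as an explicit product.
product = 1
for k in range(52, 0, -1):
    product *= k

# The standard library's factorial gives the same value.
assert product == math.factorial(52)

print(product)
print(f"about {product:.2e}")  # scientific notation for readability
```

The result is a 68-digit number, roughly 8.07 × 10^67.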

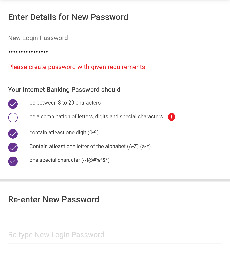

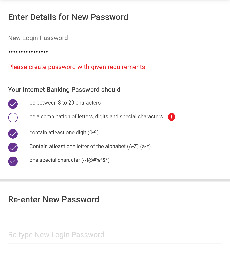

Holy shit!! You did it. I would never have expected a banking password to have a maximum number of special characters. I have been scratching my head over Bitwarden and this shitty app for an hour.

Their desktop site is even shittier. It won't allow right-click or paste. So much for compatibility with password managers.

I think this meme is about the old story of calling 911 and pretending to order a pizza. It was a viral story almost a decade ago and stayed on social media platforms as if it were a real thing. However, this widespread social media publicity actually helped a victim. Details are in the previous link. This worked only because the operator was aware of the internet lore and was able to make the connection.

In summary, don't do it. Operators will disregard your call.

He is the founder of NordPresse, a controversial satirical website well known for making stuff up and creating confusion online.

"I hope this message finds you well" is a marker I have been using to detect GPT replies. Looks like this one is from ChatGPT.

Also, it says "BLOB feature" and "BLOB functionality". What in the coconut does that mean? "BLOB feature is an important aspect for our app." Come on...

It's really pathetic that they didn't even bother to read it once.

Yeah, that's true. The UI does not accurately represent the validation conditions.

Oh, fun fact: the government also issued an order stating that VPN providers that don't log user information can't operate in India.

What about radon and radioactivity in granite countertops?

I only learned about this recently.

Edit: I should have added an explanation. It is only an issue when ventilation is very poor. The accumulation of radon gas is the only thing to worry about.

I read through your comments and the devs' reply regarding OSM. I will add a few points that could be part of the feature request. I have some experience dealing with maps, and my understanding is that you can set up an offline copy of OSM that gets updated only when required.

leaflet.offline is a library that provides similar functionality. I think that, with some modifications, it could be used to significantly reduce the load on OSM compared with using it directly.

Even at high zoom levels, say 11 to 15, a large map area takes only a few hundred MB. We once cached the entire region of California with all the details, and it was around 240 MB IIRC. But Immich does not need that much detail, and it is possible to restrict the zoom levels to limit the detail.
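To get a feel for why the zoom range dominates storage, here is a rough sketch that counts the standard slippy-map tiles (the Web Mercator x/y/z scheme used by OSM tile servers) covering a bounding box across a range of zoom levels. The function names and the California bounding box are my own, hypothetical and approximate. The sketch only counts tiles; actual disk usage depends on the average tile size, which I am not assuming here.

```python
import math


def lonlat_to_tile(lon: float, lat: float, zoom: int) -> tuple[int, int]:
    """Map a WGS84 longitude/latitude (degrees) to slippy-map tile (x, y) at `zoom`."""
    n = 2 ** zoom  # the grid is n x n tiles at this zoom level
    lat_rad = math.radians(lat)
    x = math.floor((lon + 180.0) / 360.0 * n)
    # Web Mercator y: asinh(tan(lat)) == ln(tan(lat) + sec(lat))
    y = math.floor((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp edge cases (lon == 180, latitudes beyond ~85.05 degrees) into the grid.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def count_tiles(min_lon, min_lat, max_lon, max_lat, zoom_levels) -> int:
    """Count tiles covering a bounding box, summed over the given zoom levels."""
    total = 0
    for z in zoom_levels:
        # Tile y grows southwards, so the north-west corner gives the smallest x and y.
        x_min, y_min = lonlat_to_tile(min_lon, max_lat, z)
        x_max, y_max = lonlat_to_tile(max_lon, min_lat, z)
        total += (x_max - x_min + 1) * (y_max - y_min + 1)
    return total


if __name__ == "__main__":
    # Hypothetical, approximate bounding box around California.
    california = (-124.5, 32.5, -114.1, 42.0)
    for z in range(11, 16):
        print(z, count_tiles(*california, [z]))
    print("total z11-15:", count_tiles(*california, range(11, 16)))
```

Because the grid doubles in both directions at each zoom level, every extra level roughly quadruples the tile count, which is why capping the maximum zoom is the main lever for keeping a self-hosted cache small.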

For someone self-hosting several hundred GB of photos, this should be doable without using too much storage. I think the problem is that it would be a huge engineering effort. Depending on the priority of the feature, it may not be easy to justify.

There is a site called Switch2OSM that details almost everything you need to know. The previous link explains how to serve map tiles on your own. Again, it is a daunting task and not suitable for everyone.

If anyone needs live updates of OSM as things get added, look into the commercial offerings.

In conclusion, it is possible to include a highly optimised version of OSM instead of putting their servers under heavy load. The catch is that it is not easy and will need a huge engineering effort. I think the developers should make the call on this.

I thought this story couldn't get more fucked up, but this is a whole different level. Don't open it if you found the article in the original post unbearable. I'm sharing it because I cannot wrap my head around the level of horror humans are capable of.

Woman describes horror of learning husband drugged her so others could rape her

This is something people always miss in these discussions. A graphic designer working for a medium-sized marketing company is replaceable with Stable Diffusion or Midjourney, because there, quality is not really that important. They work on quantity, and "AI" is much more "efficient" at producing quantity, without even paying for stock photos.

High-end jobs will always exist in every profession. But the vast majority of jobs in a sector do not belong to the "high-end" category. That is where the job losses are going to happen, not among Beeple Crap-level artists.

I completely agree with this. I work as a user experience researcher, and I have been noticing this for some time. I'm not a traditional UX person; I work more at the intersection of UX and programming. I think the core problem in discussions about any software product is that the people talking about it tend to assume everyone else works the same way they do.

What you call a techie here is, in simple terms, a person who uses, or has to use, the computer and file system every day. They spend a huge amount of time at a computer, and slowly they organise their stuff. Most of the time they want more control over it; some of them end up on Linux-based systems, and some find alternative ways.

There are two other kinds of people. The second category is people who use the computer every day but are completely limited to their enterprise software. Even though they spend countless hours at the computer, they don't really use the OS most of the time. A huge part of the service industry belongs to this group. Most of the time they have a dedicated IT department that takes care of any issue.

The third category is people who rarely use computers, that is, once or twice every few days. Almost all people with non-white-collar jobs belong to this category. They mainly use phones to get daily stuff done.

If you look at Microsoft's customer base, it has never been the first category. Microsoft tried really hard with .NET in the Ballmer era and even built a strong base at that time, but I am of the opinion that a huge shift happened with the wide adoption of the Internet. In some forum I recently saw someone say that TypeScript gave Microsoft some recognition and kept them relevant. They have made some good contributions too.

So, as I mentioned, the customer base was always the second and third categories. People in these categories focus only on getting stuff done: bare minimum maintenance, and results with as little effort as possible. Most of them don't really care about organising their files or even finding them. Many people just redownload stuff from email, messaging apps, or drives whenever they need a file. Microsoft tried to address this with indexed search inside the OS, but it didn't work out well because of the resource requirements and many bugs. For these users, a feature like Recall or Apple's Spotlight is really useful.

Apple and even Android are moving in this direction: restricting the user to the surface of the product and making things easy to find and use through aggregating applications. The Gallery app is a good example. Microsoft knew this long ago; the 'Pictures', 'Documents', and other default folders were an example of it. But they never 'enforced' it. In earlier days, people used to keep their documents on separate drives because Windows got corrupted easily, and when reinstalling, only the 'C:' drive needed to be formatted. Only after Microsoft started selling pre-installed Windows through OEMs were they able to change this trend.

Windows is also pushing in the same direction, limiting users to the surface, because the two categories I mentioned don't really 'maintain' their systems. Just like with cars, some people like to maintain their own, and many others let paid services take care of it. But when it comes to 'personal' computers with 'personal' files, a 'paid' service is not an option. So this lands on the shoulders of the OS companies as an opportunity. Whoever offers the better solution will see wider adoption.

Microsoft is going to run into many contradictions soon because of its early, widespread adoption of AI. Its net-zero emissions target is a straightforward example.

OpenMediaVault and Monero? But why?

Also, Ollama on a 10-year-old laptop will be fun.

`git commit --amend --no-edit`

This has helped me countless times...

This is a bit absurd. I really don't think this is as serious as some comments say. Also, there is a comment from the AUR package maintainer that explains it in more detail. And even for the blobs in the first post, there are source and build instructions in their respective folders.

It's been like this for a long time. I still find it difficult to access raw.github. As far as I can tell, the reversal hasn't been applied properly.

Edit: checked just now, still can't.

Off-topic and pedantic question. I'm not a native English speaker, so please don't take this the wrong way.

In the last sentence you said "hero to women". Is that the correct usage? Or should it be "heroine to women"?

Aah I should have clarified more. My bad.

This is only an issue when ventilation is very poor, that is, when radon gas can accumulate. In ventilated spaces it should not be a problem.

But radon is "one of the leading causes of lung cancer in non-smokers". So, from what I understood, it is not critical, but some caution is warranted.

I have a different experience. There was one thread that linked to a GitHub issue. The issue said some blobs didn't have source code. Ironically, when I checked, the blobs mentioned in the issue did have source code, but there were other blobs that seemed to be missing the source or build instructions.

I would love to see an independent audit put this issue to rest. All that happens is more and more noise and no resolution. I am not a programmer, so I can't really help here.

I also didn't understand the logic here. Why did they "not want to alarm them"? Is it because a one-person company can simply fix the issue without reporting it to any other authority? What is the rationale behind it?

Giving you the benefit of doubt here.

"/s" technically means "this comment is sarcastic in nature". But it is also commonly used with bad jokes and puns, indicating "this is indeed nonsense, and I'm aware of it, but I decided to say it anyway."

The previous comment was just a bad, cliché joke that is common on internet forums, referencing Skynet from the Terminator movie series.

Even though I don't completely agree with what the other person said, the defense you are making here is dangerous. It's not about gatekeeping or elitism, which is the other person's argument, and I don't see the point in arguing with them about that.

So here you said 'biting off more than you can chew'. The fundamental problem I see here, which people also say about Linux, is that the entry barrier is pretty high. In the case of Linux, it mostly stems from a lack of easily accessible documentation. For some specific projects, the documentation is simply incomplete. Many self-hostable applications suffer from this.

People should be able to learn their way up to chewing bigger things. That is how one improves. Most people won't enjoy a steep learning curve, and documentation helps ease that steepness. Along with that, I completely agree that many people who figure things out won't share them or contribute to the documentation.

My point is that in such scenarios, we should encourage people to contribute to the project instead of telling them there are easier ways to do it. Only then can an open source project grow.

Aah... I completely forgot about that. I'll try it next time. Also, yesterday I learned that Shift + F10 opens the context menu. I have yet to test it on this site.

Since I am not from the western hemisphere, I find it difficult to understand what is wrong with the name. Is it just that it sounds bad? Or is there another reason?

Your tricks won't work, skynet! 😜 /s

If you have used AnyDesk in the past, this gives the same experience. I used it recently; it has a lot of features, including unattended access.

They recommend self hosting an instance for better performance.

It uses the Bing API for results. DDG is just a front end for Bing.

Why? Can you elaborate?

Edit: oh, I automatically read it as J. Herkenhoff and started to wonder what the problem with the name was.

TBH, I felt this was something they made up once it got more attention. If they had felt remorse, I guess they would have come back sometime in the past two weeks to apologise or correct their mistake.

Who knows, maybe they really are ill. Maybe they just made everything up.

If you meant mega.nz or mega.io, it was founded by him, but around 2013 he cut all ties with the site. Now it is completely independent of him.

I'm using a Raspberry Pi with a binary installation of Forgejo. Pretty easy to set up if you are comfortable with the terminal.

The project has already started to diverge. If you have an older version, don't update until you migrate.

I would say that is exactly what you have to describe. As I said, certain things cannot be changed with therapy. It can only help you come to terms with them.

Regarding the last point you mentioned: you are not giving up on her. Exerting constant pressure can't change certain realities. It is like thinking you can drain an ocean with a bucket and a lot of time.

You have to accept that there is nothing 'wrong' with your partner. If she is asexual there is nothing to 'cure'. You must build your life around this fact to be happy.

This does not mean that your needs should be discarded. In the same way you accept and respect the fact that she is asexual, she also has to take a mature stand and work on finding common ground or compromises. That is how relationships work, isn't it?

Start therapy. Remember that you will need to find a suitable therapist, so don't hesitate to change therapists until you find one you are comfortable with. Maybe the therapist can help you figure out how to discuss this topic with your partner. That may slowly open up new ways to improve things.