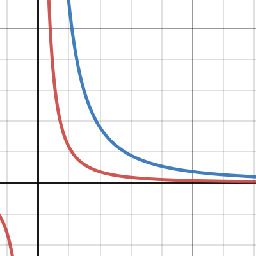

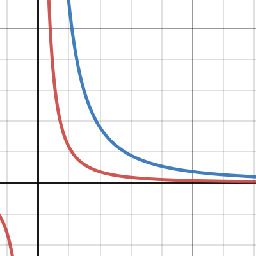

Interactive graph of the lemmy hot rank algorithm

Isn’t it always the plan for Dems to unseat Republicans? That’s how the two-party system works, no?

They probably trained it using data from their Coca-Cola Freestyle dispensers, if you’ve used one. That’s my guess.

How is this news? Some random US official suggested something??? Y’all gotta stop with this bullshit.

I’m confused how this is relevant. Just pointing out this is a bad take, not saying nukes are the same as AI. ChatGPT isn’t the only AI out there btw. For example, NYC just allowed the police to use AI to profile potential criminals… you think that’s a good thing?

Yeah, I think it’s an important distinction to make though, considering the US can definitely use that kind of money, and it might hurt to be struggling in the US and read that the government is giving away money. With weapons though, it doesn’t even feel like we’re giving anything away. Ukraine is fighting our enemy for us.

They should fine them at least a billion then

That’s a good point, however just because the bad thing hasn’t happened yet doesn’t mean it won’t. Everything has pros and cons; it’s a matter of whether or not the pros outweigh the cons.

Why do they think I got one in the first place?

I don’t need to know the ins and outs of how the Nazi regime operated to know it was bad for humanity. I don’t need to know how a vaccine works to know it’s probably good for me to get. I don’t need to know the ins and outs of personal data collection and exploitation to know it’s probably not good for society. There are lots of examples.

Which app are you using? This also isn’t the right place to post this question. If there’s a community for your app I would ask there.

Edit: there’s a pinned post at the top of this community that specifically says not to post support questions here.

That seems a little overkill, especially since it’s a feature I doubt very many people would use. Just having it separate for each account would be sufficient, require less processing power and battery, and take less time to code.

Doesn’t seem like there’s any actual money included

What companies have decided to call AI is not at all the same as what AI used to refer to and what science fiction stories refer to.

It’s also more likely to have issues like this because it’s porous and grows on the ground

It’s not like AI is using works to create something new. ChatGPT is similar to if someone were to buy 10 copies of different books, put them into 1 book as a collection of stories, then mass produce and sell the “new” book. It’s the same thing but much more convoluted.

Edit: to reply to your main point, people who make things should absolutely be able to impose limitations on how they are used. That’s what copyright is. Someone else made a song, can you freely use that song in your movie since you listened to it once? Not without their permission. You wrote a book, can I buy a copy and then use it to make more copies and sell them? Not without your permission.

What’s this from?

I’ve heard about it but have luckily yet to stumble upon any. If you’re referring to furry porn (humanoid animal characters) instead of bestiality, I know the furry community has grown in the past few years, and imo it doesn’t seem to harm anyone, so I don’t really care. Cartoon CP is a completely different issue, and while it seems abhorrent, I’ve seen arguments that it could reduce real life child sex abuse. Not sure how true this is, but at least it’s not CSAM.

That being said, since Lemmy is decentralized it’s much more difficult to moderate. In addition, I don’t think Lemmy has strong moderator tools yet (from what I’ve heard). I know they’ve recently introduced code to identify and block CSAM before it’s posted, and I believe future improvements will be made, such as better mod tools etc. Also, part of the issue is the relatively low user activity.

My suggestion for you is to find an instance that has a rule against the types of content you’d like to avoid, and defederates from instances that allow it.

They could add an imperceptible audio watermark

I don’t think most people think AI is sentient. In my experience the people that think that are the ones who think they’re the most educated, saying stuff like “neural networks are basically the same as a human brain.”

Yeah sure “guns don’t kill people, people kill people” is an outrageous take.

If it were trained on a single book, the output would be the book. That’s the base level without all the convolution and that’s what we should be looking at. Do you also think someone should be able to train a model on your appearance and use it to sell images and videos, even though it’s technically not your likeness?

A few points:

Humans are more than just a brain. There’s the entire experience of ego, individualism, and body.

Another massive distinction is autonomy and liberty, which no AI models currently possess.

We don’t know all there is to know about the human brain. We can’t say it is functionally equivalent to a neural network.

If complexity is irrelevant, then the simplest neural network trained on a single work of writing is equivalent to the most advanced models for the purposes of this discussion. Such a network would, again, output a copy of the work it was trained on.

When we’ve developed a true self-aware AI that can move and think freely, the idea that there is little difference will have more weight to it.

I don’t disagree that people are stupid, but the majority of people got/supported the vaccine. Majority is sometimes a good indicator, that’s how democracy works. Again, it’s not perfect, but it’s not useless either.

I’ve coded LLMs, I was just simplifying it because at its base level it’s not that different. It’s just much more convoluted as I said. They’re essentially selling someone else’s work as their own, it’s just run through a computer program first.

There are a lot of apps that let you filter words or phrases if it’s not built into Lemmy

There are a lot more countries than yours, believe it or not, and some of them don’t have the same justice system as yours. Do people in your country have the right to vote? Same sentiment, do you think that’s a stupid system?

It can be changed. If it had no limit or a higher limit, the slider would move in greater increments, so you’d have less fine control.

I agree with what you’re saying, and a model that is only trained on public domain would be fine. I think the very obvious line is that it’s a computer program. There seems to be a want for computers to be human but they aren’t. They don’t consume media for their own enjoyment, they are forced to do it so someone can sell the output as a product. You can’t compare the public domain to life.

Are you familiar with juries?

No, someone emulating someone else’s style is still going to have their own experiences, style, and creativity make their way into the book. They have an entire lifetime of “training data” to draw from. An AI that would “emulate” someone else’s style would really only be able to refer to the author’s books, or someone else’s books, therefore it’s stealing. Another example: if someone decided to remix different parts of a musician’s catalogue into one song, that would be a copyright infringement. AI adds nothing beyond what it’s trained on, therefore whatever it spits out is just other people’s works in a different way.

You don’t have to understand how an atomic bomb works to know it’s dangerous