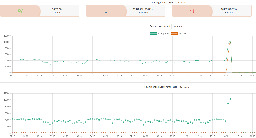

I set up a Friendica instance earlier in the week. Turns out the DNS queries had increased somewhat dramatically.

I set up Friendica as my first foray onto the fediverse. It worked well, but as it turns out, it doesn't work that well with Lemmy, which was my main use case. Then, whilst trying to fix DNS issues on a Lemmy instance I was setting up instead, I noticed my DNS logs were rather full. My Unbound DNS was getting 40k requests every 10 mins to `*.activitypub-troll.cf`. I don't know who or what that is, but blocking it didn't reduce the activity. At first I thought it was something to do with Lemmy, as I'd forgotten I still had Friendica running. Thankfully, stopping the Friendica service brought the DNS requests back to normal.
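For scale, here's a quick back-of-the-envelope calculation on the 40k figure, assuming the rate stayed constant across a whole day (an assumption; the logs only showed the 10-minute windows):

```python
# Figures from the post: ~40,000 queries per 10-minute window.
QUERIES_PER_WINDOW = 40_000
WINDOW_SECONDS = 10 * 60

per_second = QUERIES_PER_WINDOW / WINDOW_SECONDS
# 86,400 s in a day / 600 s per window = 144 windows per day.
per_day = QUERIES_PER_WINDOW * (24 * 60 * 60 // WINDOW_SECONDS)

print(f"{per_second:.1f} queries/s, {per_day:,} queries/day")
# → 66.7 queries/s, 5,760,000 queries/day
```

That's a lot of lookups for a single-user instance.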

So if you've set something up recently, you might want to check your service logs for any unexpected side effects.
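One way to see which names are behind a spike like this is to turn on `log-queries: yes` in unbound.conf and tally the logged names. This sketch assumes each query line contains `info: <client-ip> <name>. <type> <class>`, which is the shape Unbound's query logging uses; the function name, regex and sample lines are made up for illustration, and grouping by the last two labels is naive (it would lump all `co.uk` domains together).

```python
import re
from collections import Counter
from typing import Iterable

# Assumed shape of an Unbound `log-queries: yes` line, e.g.
# "... unbound: [812:0] info: 172.18.0.5 x1.activitypub-troll.cf. A IN"
# Reply lines (log-replies) have extra fields after the class, so they don't match.
QUERY_RE = re.compile(r"info: (\S+) (\S+) (\S+) (\S+)\s*$")


def top_domains(lines: Iterable[str], labels: int = 2) -> Counter:
    """Count queried names grouped by their last `labels` DNS labels."""
    counts: Counter = Counter()
    for line in lines:
        match = QUERY_RE.search(line)
        if not match:
            continue  # not a query line
        name = match.group(2).rstrip(".").lower()  # drop the trailing root dot
        suffix = ".".join(name.split(".")[-labels:])
        counts[suffix] += 1
    return counts


sample = [
    "Jun 10 12:00:01 host unbound: [812:0] info: 172.18.0.5 x1.activitypub-troll.cf. A IN",
    "Jun 10 12:00:01 host unbound: [812:0] info: 172.18.0.5 x2.activitypub-troll.cf. AAAA IN",
    "Jun 10 12:00:02 host unbound: [812:0] info: 192.168.1.10 lemmy.ml. A IN",
    "Jun 10 12:00:02 host unbound: [812:0] info: service stopped",
]
counts = top_domains(sample)
print(counts.most_common(5))
```

Against a real log you'd do something like `with open(path) as f: print(top_domains(f).most_common(10))`, where `path` is whatever your `logfile:` setting points at. Swapping `group(2)` for `group(1)` counts by client IP instead, which would have pointed straight at the Friendica container.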

Right now I'm hosting the following:

The next project will probably be a private Lemmy instance.

Good luck with setting up Lemmy! Their documentation is terrible and the docker-compose.yml files are not fit for purpose. And if you already have Nginx set up as a reverse proxy (as I do), it just seems to get worse. If I get time, I'll write up and publish my understanding and config.

While it's true that their tutorial contains some errors, it's not all that hard to set up imo.

Basically, they expose the wrong ports in the nginx section (it should be 80, not the UI/backend ports). Also, the compose file assumes you are building the Lemmy image yourself; to change this, you have to comment out the lines in the "build" section under Lemmy and enable the "image" line. And you have to set the database user and password in the Lemmy config file.
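If you'd rather script the build-to-image switch than do it by hand, here's a rough Python sketch. It assumes the `lemmy` service in docker-compose.yml has an indented `build:` block and a commented-out `# image:` line, as described above; the function name and sample file are hypothetical (the image tag is just an example), and it's plain line-by-line text editing rather than a YAML parser, so check the result before using it.

```python
def use_prebuilt_image(compose: str, service: str = "lemmy") -> str:
    """Comment out `service`'s build: block and uncomment its `# image:` line."""
    result = []
    service_indent = None  # indent of the "lemmy:" key while inside that service
    build_indent = None    # indent of "build:" while inside the build block
    for line in compose.splitlines():
        stripped = line.lstrip()
        indent = len(line) - len(stripped)
        if stripped:
            # A non-blank line no deeper than "build:" ends the build block.
            if build_indent is not None and indent <= build_indent:
                build_indent = None
            # A non-blank line no deeper than the service key ends the service.
            if service_indent is not None and indent <= service_indent:
                service_indent = None
        if service_indent is None and stripped == f"{service}:":
            service_indent = indent
        elif service_indent is not None and stripped == "build:":
            build_indent = indent
            line = " " * indent + "# " + stripped
        elif build_indent is not None and stripped:
            line = " " * indent + "# " + stripped  # child of build: -> comment out
        elif service_indent is not None and stripped.startswith("# image:"):
            line = " " * indent + stripped[2:]  # "# image: ..." -> "image: ..."
        result.append(line)
    return "\n".join(result) + "\n"


sample = """services:
  lemmy:
    # image: dessalines/lemmy:0.17.4
    build:
      context: ../
      dockerfile: docker/Dockerfile
    ports:
      - "8536:8536"
  lemmy-ui:
    build:
      context: ../../lemmy-ui
"""
out = use_prebuilt_image(sample)
print(out)
```

Only the `lemmy` service is touched; the `lemmy-ui` build block is left alone, since it has its own image/build choice to make.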

Regarding your usage of nginx: while I use Apache myself, the config should be comparable and comes down to setting up a reverse proxy to the host port you have bound the nginx container to (i.e. whatever you map container port 80 to). While this means you will effectively have two instances of nginx running, one as the internal proxy for Lemmy and one as the reverse proxy for external access, it has worked flawlessly in my experience.
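As a rough idea of what the outer proxy needs, here's a small Python helper that renders a plain-HTTP nginx server block pointing at the inner nginx container. The function name, domain and port 8080 are placeholders; the Upgrade/Connection headers are there on the assumption that your Lemmy version still uses websockets (they're harmless otherwise), and TLS is left out to keep it short.

```python
def outer_proxy_block(server_name: str, host_port: int) -> str:
    """Render a hypothetical outer nginx server block for a Lemmy stack.

    host_port is the host port mapped to the inner nginx container's port 80
    (e.g. "8080:80" in docker-compose).
    """
    lines = [
        "server {",
        "    listen 80;",
        f"    server_name {server_name};",
        "",
        "    location / {",
        # Forward everything to the inner nginx container via its host port.
        f"        proxy_pass http://127.0.0.1:{host_port};",
        "        proxy_http_version 1.1;",
        # Pass websocket upgrades through (assumed needed for this Lemmy era).
        "        proxy_set_header Upgrade $http_upgrade;",
        '        proxy_set_header Connection "upgrade";',
        "        proxy_set_header Host $host;",
        "        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;",
        "        proxy_set_header X-Forwarded-Proto $scheme;",
        "    }",
        "}",
    ]
    return "\n".join(lines) + "\n"


conf = outer_proxy_block("lemmy.example.com", 8080)
print(conf)
```

The printed block would go in your sites config alongside your other server blocks; the inner nginx then handles routing between the UI and backend as the compose file intends.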

I am new to the fediverse, and I don't use Friendica, so I could be entirely wrong about this. However, from what is described, perhaps Friendica has some sort of feature that triggers your instance to go out and fetch data from another instance. Someone exploited this feature and spammed your instance with content from an assortment of subdomains on the `*.activitypub-troll.cf` domain, most if not all of which probably don't exist. As a result, your server is re-checking every 10 minutes to see if they've come back online. This would also explain why shutting down the Friendica service resolved the problem for you.

That's about what I concluded too. I do wonder, if the fediverse grows to tens or hundreds of millions of users, just how scalable the networking will be, and how susceptible to DDoS. I don't have a detailed understanding of the communication protocols; I've just noticed a reasonable amount of traffic in the log files of my single-user Lemmy instance.

Yeah, the entire setup is still quite finicky. Part of me thinks the Fediverse has been forced into the spotlight by Twitter (Mastodon) and Reddit (Lemmy), and the whole thing is not quite baked yet. Don't get me wrong, having a more open space is great, but so many things aren't quite ready for prime time. I hope the dev team behind the platform (not the self-hosted instance admins) will be more open to ideas and improve the platform rapidly.

The dev team are changing things at will but the documentation is pointing users at the latest master version.

There needs to be better change control and a bigger emphasis on supporting people setting up production environments, not just dev ones. That means nothing should be broken in whichever labelled version of a file people are told to use.

Amen to that. I totally hear you. There are SO many things I think could be done better. I just hope the dev team is ready to embrace the spotlight, and keep up with all the demands without burning out!

What are you using for your DNS TTL value?

These were queries coming out of the Friendica instance, hitting my DNS server and then being forwarded upstream. I don't think I can control the TTL on that.
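For context on why TTL matters here: a caching resolver such as Unbound answers repeat lookups from its cache until the record's TTL runs out, and only then goes upstream. The toy model below (not Unbound's actual code; the class and names are made up) also shows the catch: every distinct name is its own cache entry, so if the flood used lots of different subdomains, each one misses the cache whatever the TTL is.

```python
import time
from typing import Callable, Dict, Tuple


class TtlCache:
    """Toy caching resolver: reuse an answer until its TTL expires."""

    def __init__(self, upstream: Callable[[str], Tuple[str, int]],
                 clock: Callable[[], float] = time.monotonic):
        self.upstream = upstream  # returns (answer, ttl_seconds)
        self.clock = clock
        self.entries: Dict[str, Tuple[str, float]] = {}  # name -> (answer, expiry)
        self.upstream_queries = 0

    def resolve(self, name: str) -> str:
        now = self.clock()
        cached = self.entries.get(name)
        if cached is not None and cached[1] > now:
            return cached[0]  # cache hit: nothing sent upstream
        answer, ttl = self.upstream(name)  # miss or expired: forward upstream
        self.upstream_queries += 1
        self.entries[name] = (answer, now + ttl)
        return answer


fake_now = [0.0]  # controllable clock for the demo
cache = TtlCache(lambda name: ("NXDOMAIN", 300), clock=lambda: fake_now[0])
for _ in range(100):
    cache.resolve("same.example")  # only the first lookup goes upstream
for i in range(100):
    cache.resolve(f"x{i}.example")  # 100 distinct names: 100 misses
print(cache.upstream_queries)
```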

Haha, mine's gone up just a bit too since running Lemmy :D