I work for a fairly big IT company. They're currently going nuts about how generative AI will change everything for us and have been for the last year or so. I'm yet to see it actually be used by anyone.

I imagine the new Microsoft Office copilot integration will be used only slightly more than Clippy was back in the day.

But hey, maybe I'm just an old man shouting at the AI powered cloud.

Copilot is often a brilliant autocomplete, and that alone will save workers plenty of time if they learn to use it.

I know that as a programmer, I spend a large percentage of my time simply transcribing correct syntax of whatever’s in my brain to the editor, and Copilot speeds that process up dramatically.

The problem is when the autocomplete just starts hallucinating things and you don't catch it.

If you blindly accept autocompletion suggestions then you deserve what you get. AIs aren’t gods.

AI's aren't god's.

Probably will happen soon.

OMG thanks for being one of like three people on earth to understand this

> you don’t catch it

That's on you then. Copilot even very explicitly notes that the AI can be wrong, right in the chat. If you just blindly accept anything not confirmed by you, it's not the tool's fault.

I feel like the process of getting the code right is how I learn. If I just type vague garbage in and the AI tool fixes it up, I'm not really going to learn much.

Autocomplete doesn’t write algorithms for you, it writes syntax. (Unless the algorithm is trivial.) You could use your brain to learn just the important stuff and let the AI handle the minutiae.

Where "learn" means "memorize arbitrary syntax that differs across languages"? Anyone trying to use Copilot as a substitute for learning concepts is going to have a bad time.

AI can help you learn by chiming in about things you didn't know you didn't know. I wanted to compare images to ones in a dataset that may have been resized, and the solution the AI gave me involved blurring the images slightly before comparing them. I pointed out that this seemed wrong, because won't slight differences in the files produce different hashes? But the response was that the algorithm being used was perceptual hashing, which only needs images to be approximately the same to produce the same hash, and the blurring was to make this work better. Since I know AI often makes shit up, I of course did more research and tested that the code worked as described, and it all turned out to be true.

If I hadn't been using AI, I would have wasted a bunch of time trying to get the images pixel-perfect identical so they would work with a naive hashing algorithm, because I wasn't aware of a better way to do it. Since I used AI, I learned more about what solutions are available, more quickly. I find that this happens pretty often; there's actually a lot that it knows that I wasn't aware of or had a false impression of. I can see how someone might use AI as a programming crutch and fail to pay attention or learn what the code does, but it can also be used in a way that helps you learn.
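For anyone curious what that looks like, here is a rough sketch of the idea in plain Python. It is not the code the AI produced, and the thread never says which perceptual hash was used; this uses average hash (aHash), one of the simplest, and every function name and test image below is made up for illustration. The image is blurred, shrunk to 8x8, and each cell becomes one bit depending on whether it is brighter than the mean, so a resized copy lands on (nearly) the same bits.

```python
# Average hash (aHash) sketch for grayscale images stored as 2D lists of
# brightness values. Assumption: the comment above does not name the exact
# algorithm; aHash is just one simple perceptual hash, chosen for illustration.


def box_blur(img):
    """3x3 box blur, averaging only the neighbours that lie inside the image."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            ys = range(max(0, y - 1), min(h, y + 2))
            xs = range(max(0, x - 1), min(w, x + 2))
            vals = [img[j][i] for j in ys for i in xs]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


def shrink(img, size=8):
    """Downscale to size x size by averaging each cell (image must be >= size)."""
    h, w = len(img), len(img[0])
    out = []
    for gy in range(size):
        y0, y1 = gy * h // size, (gy + 1) * h // size
        row = []
        for gx in range(size):
            x0, x1 = gx * w // size, (gx + 1) * w // size
            cell = [img[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(cell) / len(cell))
        out.append(row)
    return out


def average_hash(img, size=8):
    """Blur, shrink, then set one bit per cell: 1 if brighter than the mean."""
    small = shrink(box_blur(img), size)
    flat = [v for row in small for v in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for v in flat:
        bits = (bits << 1) | int(v > mean)
    return bits


def hamming(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


# Hypothetical test images: a 32x32 horizontal gradient, the same picture
# upscaled to 64x64 (nearest neighbour), and an unrelated vertical gradient.
original = [[x * 8 for x in range(32)] for _ in range(32)]
resized = [[original[y // 2][x // 2] for x in range(64)] for y in range(64)]
unrelated = [[y * 8 for _ in range(32)] for y in range(32)]

print("resized:  ", hamming(average_hash(original), average_hash(resized)))
print("unrelated:", hamming(average_hash(original), average_hash(unrelated)))
```

A small Hamming distance means "probably the same picture" and a large one means "probably not"; where to draw the line is something you would have to tune on your own dataset.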

As a SWE, I use AI a lot as well. The other day I used it to remove an old feature flag from our server graphs, along with all the now-deprecated code, in one click. Unit tests still passed afterward, which saved me like 1-2 hours of manual work.

It's good for boilerplate and refactors more than anything

AI bad tho!!!

A friend of mine works in marketing (think "websites for small companies"). They use an LLM to turn product descriptions into early draft advertising copy and then refine from there. Apparently that saves them some time.

It saves a ton of time. I've worked with clients before and I'll put a lorem ipsum as a placeholder for text they're supposed to provide. Then the client will send me a note saying there's a mistake and the text needs to be in English. If the text is almost close enough to what the client wants, they might actually read it and send edits if you're lucky.

I've actually pushed products out with lorem ipsum on them because the client never provided us with copy. As you say, they seem to think that the copy is in there, but just in some language they can't understand. I don't know how they can possibly think that since they've never sent any, but if they were bright they wouldn't work in marketing.

The problem with GenAI is the same as with any system: garbage in, garbage out. Couple it with no tuning and it’s a disaster waiting to happen. Good GenAI can exist, but you need some serious data science and time to tune it. Right now that makes it cost more than doing it by hand (and by quite a bit). LLMs are useful given that they’ve been trained on general human writing patterns, but for a company to replace functions that involve highly specific tasks, it needs to develop and push its own data sets and training, which companies don’t want to spend the money on.

I'm a developer with about 15 years of experience. I got into my company's copilot beta program.

Now maybe you are some magical programmer who knows everything and doesn't need Stack Overflow, but for me it's all but completely replaced it. Instead of hunting around for a general answer and then applying it to my code, I can ask very explicitly how to do that one thing in my code, and it will auto-generate some code that is usually like 90% correct.

Same thing when I'm adding a class that follows a typical pattern elsewhere in my code... well, it will auto-generate the entire class, again with like 90% of it being correct. (What I don't understand is how often it makes up enum values when it clearly has some context about the rest of my code.) I'm often shocked at how well it knew what I was about to do.

I have an exception that's not quite clear to me? Well, just paste it into the Copilot chat and it gives a very good plain English explanation of what happened and generally a decent idea of where to look.

And this is a technology in its infancy. It's only been released for a little over a year, and it has definitely improved my productivity. Based on how I've found it useful, it will be especially good for junior devs.

I know it's in, especially on Lemmy, to shit on AI, but I would highly recommend any dev get comfortable with it because it is going to change how things are done and, even in its current form, it's a pretty useful tool.

It's in to shit on AI because it's ridiculously overhyped, and people naturally want to push back on that. Pretty much everyone agrees it'll be useful, just not "replace all the jobs" useful.

And a chunk of the jobs it will replace were on their way out the door anyway. There are already plenty of fast food places with kiosks to order, and they haven't replaced any specific person, just a small function of one job.

I expect it'll be useful on the order of magnitude of Google Search, not revolutionary on the scale of the internet. And I think that's a reasonable amount of credit.

It will take the market by storm, just like NFT with bored apes.

I'd love a boosted Clippy powered by AI! It would have incredible animations while sitting there in the corner doing nothing!

> I’m yet to see it actually be used by anyone.

None of your programmers are using genAI to prototype, analyze errors or debug faster? Either they are seriously missing out or you're not following.

I think the "AI will revolutionize everything" hype is stupid, but I definitely get a lot of added productivity when coding with it, especially when discovering APIs. I do have to double-check, but overall I'm definitely faster than before. I think it's good at reducing the mental load of starting a new task too, because you can ask for some ideas and pick what you like from it.

Mine aren't, because the execs have mandated that they not use it: there is a potential security risk in leaking our code to AI servers.

My company has an agreement with a genAI provider so the data won't be leaked. We have an internal website; it's not the public one. We can also add our own data to the model to get results relevant to the company's knowledge.

I was watching this morning's WAN podcast (linustechtips) and they had an interview with Jim Keller talking mostly about AI.

The portions about AI felt like he was living in an alternate universe, predicting AI will be used literally everywhere.

My bullshit-o-meter hit the stars but comments on the video seem positive 🤔

I think it massively depends on what your job is. I know quite a lot of people at work use AI to draft out documents; it's a good way to get started. I also suspect that quite a lot of documents are 100% AI since we have a lot of stuff that we write but no one ever reads, so what's the point in putting effort in?

I tried to use it to write some documentation for various processes at work, but the AI doesn't know about our processes and I couldn't figure out a way to tell it about them. It either missed steps or just made stuff up, so for me it's not really useful.

So it works as long as you don't need anything too custom. But then we have engineers that go out to businesses and presumably they don't use AI for anything because there's nothing it can do that would be useful for them.

So right there in one business you have three groups of people: people who use AI a lot, people who've tried to use AI and don't find it useful, and people who basically have no use for AI.

I have one guy using AI to generate a status report by compiling all his reports’ statuses!

I’m hoping to be one of the people to benefit, if Security would approve AI. As a DevOps guy, I’m continually jumping among programming and scripting languages and it sometimes takes a bit to change context. If I’m still in Python mode, why shouldn’t I get a jump by AI translating to Java, or Groovy, or Go, or PowerShell, or whatever flavor of shell script? As the new JavaScript “expert” at my company, why can’t I continue avoiding Learning JavaScript?

I work for a fairly big IT company. They're currently going nuts about how generative AI will change everything for us and have been for the last year or so. I'm yet to see it actually be used by anyone.

I imagine the new Microsoft Office copilot integration will be used only slightly more than Clippy was back in the day.

But hey, maybe I'm just an old man shouting at the AI powered cloud.

Copilot is often a brilliant autocomplete, that alone will save workers plenty of time if they learn to use it.

I know that as a programmer, I spend a large percentage of my time simply transcribing correct syntax of whatever’s in my brain to the editor, and Copilot speeds that process up dramatically.

problem is when the autocomplete just starts hallucinating things and you don't catch it

If you blindly accept autocompletion suggestions then you deserve what you get. AIs aren’t gods.

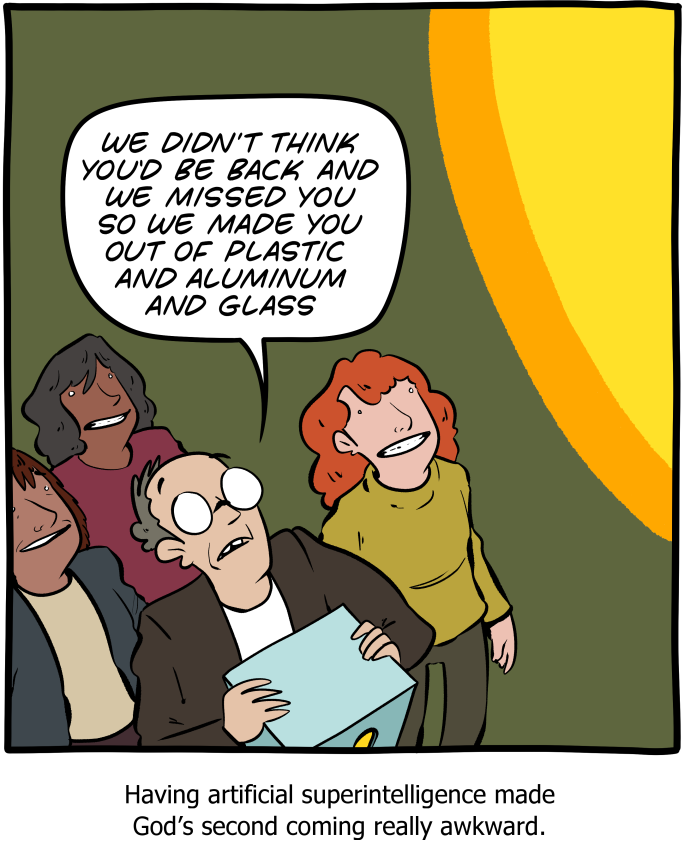

Probably will happen soon.

OMG thanks for being one of like three people on earth to understand this

That's on you then. Copilot even very explicitly notes that the ai can be wrong, right in the chat. If you just blindly accept anything not confirmed by you, it's not the tool's fault.

I feel like the process of getting the code right is how I learn. If I just type vague garbage in and the AI tool fixes it up, I'm not really going to learn much.

Autocomplete doesn’t write algorithms for you, it writes syntax. (Unless the algorithm is trivial.) You could use your brain to learn just the important stuff and let the AI handle the minutiae.

Where "learn" means "memorize arbitrary syntax that differs across languages"? Anyone trying to use copilot as a substitute for learning concepts is going to have a bad time.

AI can help you learn by chiming in about things you didn't know you didn't know. I wanted to compare images to ones in a dataset, that may have been resized, and the solution the AI gave me involved blurring the images slightly before comparing them. I pointed out that this seemed wrong, because won't slight differences in the files produce different hashes? But the response was that the algorithm being used was perceptual hashing, which only needs images to be approximately the same to produce the same hash, and the blurring was to make this work better. Since I know AI often makes shit up I of course did more research and tested that the code worked as described, but it did and was all true.

If I hadn't been using AI, I would have wasted a bunch of time trying to get the images pixel perfect identical to work with a naive hashing algorithm because I wasn't aware of a better way to do it. Since I used AI, I learned more about what solutions are available, more quickly. I find that this happens pretty often; there's actually a lot that it knows that I wasn't aware of or had a false impression of. I can see how someone might use AI as a programming crutch and fail to pay attention or learn what the code does, but it can also be used in a way that helps you learn.

I use AI a lot as well as a SWE. The other day I used it to remove an old feature flag from our server graphs along with all now-deprecated code in one click. Unit tests still passed after, saved me like 1-2 hours of manual work.

It's good for boilerplate and refactors more than anything

AI bad tho!!!

A friend of mine works in marketing (think "websites for small companies"). They use an LLM to turn product descriptions into early draft advertising copy and then refine from there. Apparently that saves them some time.

It saves a ton of time. I've worked with clients before and I'll put a lorem ipsum as a placeholder for text they're supposed to provide. Then the client will send me a note saying there's a mistake and the text needs to be in English. If the text is almost close enough to what the client wants, they might actually read it and send edits if you're lucky.

I've actually pushed products out with lorem ipsum on it because the client never provided us with copy. As you say they seem to think that the copy is in there, but just in some language they can't understand. I don't know how they can possibly think that since they've never sent any, but if they were bright they wouldn't work in marketing.

The problem with GenAI is the same as any system. Garbage in equals garbage out. Couple it with no tuning and it’s a disaster waiting to happen. Good GenAI can exist, but you need some serious data science and time to tune it. Right now that puts the cost outside of the “do it by hand” realm (and by quite a bit). LLMs are useful given that they’ve been trained on general human writing patterns, but for a company to be able to replace their functions with highly specific tasks they need to develop and push their own data sets and training which they don’t want to spend the money on.

I'm a developer with about 15 years of experience. I got into my company's copilot beta program.

Now maybe you are some magical programmer that knows everything and doesn't need stack overflow, but for me it's all but completely replaced it. Instead of hunting around for a general answer and then applying it to my code, I can ask very explicitly how to do that one thing in my code, and it will auto generate some code that is usually like 90% correct.

Same thing when I'm adding a class that follows a typical pattern elsewhere in my code...well it will auto generate the entire class, again with like 90% of it being correct. (What I don't understand is how often it makes up enum values, when it clearly has some context about the rest of my code) I'm often shocked as to how well it knew what I was about to do.

I have an exception thats not quite clear to me? Well just paste it into the copilot chat and it gives a very good plain English explanation of what happened and generally a decent idea of where to look.

And this is a technology in it's infancy. It's only been released for a little over a year, and it has definitely improved my productivity. Based on how I've found it useful, it will be especially good for junior devs.

I know it's in, especially on lemmy, to shit on AI, but I would highly recommend any dev get comfortable with it because it is going to change how things are done and it's, even in its current form, a pretty useful tool.

It's in to shit on AI because it's ridiculously overhyped, and people naturally want to push back on that. Pretty much everyone agrees it'll be useful, just not replace all the jobs useful.

And a chunk of the jobs it will replace were on their way out the door anyway. There are already plenty of fast food places with kiosks to order, and they haven't replaced any specific person, just a small function of one job.

I expect it'll be useful on the order of magnitude of Google Search, not revolutionary on the scale of the internet. And I think that's a reasonable amount of credit.

It will take the market by storm, just like NFT with bored apes.

I'd love a boosted Clippy powered by AI! It would have incredible animations while sitting there in corner doing nothing!

None of your programmers are using genAI to prototype, analyze errors or debug faster? Either they are seriously missing out or you're not following.

I think the "AI will revolutionize everything" hype is stupid, but I definitely get a lot of added productivity when coding with it, especially when discovering APIs. I do have to double-check, but overall I'm definitely faster than before. I think it's good at reducing the mental load of starting a new task too, because you can ask for some ideas and pick what you like from it.

Mine aren't. Because it has been mandated by the execs not to because there is a potential security risk in leaking our code to AI servers.

My company has an agreement with a genAI provider so the data won't be leaked, we have an internal website, it's not the public one. We can also add our own data to the model to get results relevant to the company's knowledge.

I was watching this morning's WAN podcast (linustechtips) and they had an interview with Jim Keller talking mostly about AI.

The portions about AI felt like he was living in an alternate universe, predicting AI will be used literally everywhere.

My bullshit-o-meter hit the stars but comments on the video seem positive 🤔

I think it massively depends on what your job is. I know quite a lot of people at work use AI to to draft out documents, it's a good way to get started. I also suspect that quite a lot of documents are 100% AI since we have a lot of stuff that we write but no one ever reads, so what's the point in putting effort in?

I tried to use it to write some documentation for various processes at work but the AI doesn't know about our processes and I couldn't figure out a way to tell it about our processes and so it either missed steps or just made stuff up so for me it's not really useful.

So it works as long as you don't need anything too custom. But then we have engineers that go out to businesses and presumably they don't use AI for anything because there's nothing it can do that would be useful for them.

So right there in one business you have three groups of people, people who use AI a lot, people who've tried to use AI and don't find it useful, and people who basically have no use for AI.

I have one guy using AI to generate a status report by compiling all his report’ statuses!

I’m hoping to be one of the people to benefit, if Security would approve AI. As a DevOps guy, I’m continually jumping among programming and scripting languages and it sometimes takes a bit to change context. If I’m still in Python mode, why shouldn’t I get a jump by AI translating to Java, or Groovy, or Go, or PowerShell, or whatever flavor of shell script? As the new JavaScript “expert” at my company, why can’t I continue avoiding Learning JavaScript?