AI Is Starting to Look Like the Dot Com Bubble

stopthatgirl7@kbin.social to

futurism.com

As the AI market continues to balloon, experts are warning that its VC-driven rise is eerily similar to that of the dot com bubble.

Just a reminder that the dot com bubble was a problem for investors, not the underlying technology that continued to change the entire world.

That's true, but investors have a habit of making their problems everyone else's problems.

Not that you're wrong per se, but the dotcom bubble didn't impact my life at all back in the day. It was on the news and that was it. I think this will be the same. A bunch of investors will lose their investments, maybe some adventurous pension plans will suffer a bit, but on the whole life will go on.

The impact of AI itself will be much further reaching. We better force the companies that do survive to share the wealth otherwise we're in for a tough time. But that won't have anything to do with a bursting investment bubble.

Lots of everyday normal people lost their jobs due to the bubble. Saying it only impacted the already rich investors is wrong.

There really isn't much that can harm rich people that won't indirectly do splash damage on other people, just because their actions control so much of the economy that people depend on for survival.

The dotcom bubble was one of the middle dominoes on the way to the 2008 collapse: the Fed dropped interest rates to near zero and kept them there for years, investor confidence was low, so here come mortgage-backed securities.

In addition, the bubble bursting and its aftermath is what allowed the big players in tech (Amazon, Google, Cisco, etc.) to merge to monopoly, which hasn’t been particularly good.

Good for you. But perhaps fuck off, because some of us lost jobs, homes, and financial stability.

Yeah. Note how we're having this conversation over the web. The bubble didn't hurt the tech.

This is something to worry about if you're an investor or if you're making big career decisions.

If you have a managed investment account, like a 401(k), it might be worth taking a closer look at it. There's no shortage of shysters in finance.

In Canada, a good example is the cannabis industry. Talk about fucking up opportunities.

Why would it be any different with tech?

It’s about the early cash-grab, imo.

The best way to make money in the gold rush was selling shovels.

Same idea here. Nvidia is making bank.

And that's before you even point out that they were also making bank from the last gold rush

Good time to be in the GPU business

If NVDA is selling shovels, what is TSMC?

selling steel

And then you have ASML who sell the foundry equipment that makes the steel.

Cutting trees

shovel making equipment

they are the shovel

metaphors

Heard of something similar in the past. "Be the Arms dealer"

This kind of feels like a common sense observation to anyone that's been mildly paying attention.

Tech investors do this to themselves every few years. In literally the last 6-7 years, this happened with crypto, then again but more specifically with NFTs, and now AI. Hell, we even had some crazes going on in parallel, with self-driving cars also being a huge dead end in the short term (Teslas will have flawless full self-driving any day now! /s).

AI will definitely transform the world, but not yet and not for a while. Same with self-driving cars. But that being said, most investors don't even care. They're part of the reason this gets so hyped up, because they'll get in first, pump value, then dump and leave a bunch of other suckers holding the bags. Rinse and repeat.

I also don’t know why this is a surprise. Investors are always looking for the next small thing that will make them big money. That’s basically what investing is …

Indeed. And it's what progress in general is. Should we stop trying new things? Sometimes they don't work, oh well. Sometimes they do, and it's awesome.

Great point.

You're conflating creating dollar value with progress. Yes the technology moves the total net productivity of humankind forward.

Investing exists because we want to incentivize that. Currently you and the thread above are describing bad actors coming in, seeing this small single-digit productivity increase and misrepresenting it so that other investors buy in. Then dipping and causing the bubble to burst.

Something isn't a 'good' investment just because it makes you a 600% return. I could go rob someone if I wanted that return. Hell, even if I then killed that person by accident, the net negative to human productivity would be less.

These bubbles unsettle homes, jobs, markets, and educations. Inefficiency that makes money for anyone in the stock market should have been crushed out.

No, progress is being driven by investment, it isn't measured by investment. If some new startup gets a million billion dollars of investment that doesn't by itself represent progress. If that startup then produces a new technology with that money then that is progress.

These "investment rushes" happen when a new kind of technology comes along and lots of companies are trying to develop it in a bunch of different ways. There's lots of demand for investment in a situation like this and lots of people are willing to throw some money at them in hopes of a big return, so lots of investment happens and those companies try out a whole bunch of new tech with it. Some of them don't pan out, but we won't know which until they actually try them. As long as some of them do pan out then progress happens.

Just because some don't pan out doesn't mean that "bad actors" were involved. Sometimes ideas just don't work out and you can't know that they won't until you try them.

Perhaps we're speaking to different points. Parent comment said that investors are always looking for better and better returns. You said that's how progress works. That sentiment was my quibble.

I took the "investors are always looking for better returns" to mean "unethically so" and was more talking about what happens long term. Reading your comment above, I think you might have been talking about good faith.

In a sound system that's how things work, sure! The company gets investment into tech and continues to improve, and the investors get to enjoy the progress's returns.

That's not what I interpreted the parent as saying. He said: "Investors are always looking for the next small thing that will make them big money."

Which I think my interpretation fits just fine - investors would like to put their money into something new that will become successful, that's how they make big money.

The word "ethical" has become heavily abused in discussions of AI over the past six months or so, IMO. It's frequently being used as a thought-terminating cliche, where people declare "such-and-such approach is how you do ethical AI" and then anyone who disagrees can be labelled as supporting "unethical" approaches. I try to avoid it as much as possible in these discussions. Instead, I prefer a utilitarian approach when evaluating these things. What results in the best outcome for the greatest number of people? What exactly is a "best outcome" anyway?

In the case of investment, I like a system where people put money into companies that are able to use that money to create new goods and services that didn't exist before. That outcome is what I call "progress." There are lots of tricky caveats, of course. Since it's hard to tell ahead of time what ideas will be successful and what won't, it's hard to come up with rules to prohibit scams while still allowing legitimate ideas to have their chance. It's especially tricky because even failed ideas can still result in societal benefits if they get their chance to try. Very often the company that blazes a new trail ends up not being the company that successfully monetizes it in the long term, but we still needed that trailblazer to create the right conditions.

So yes, these "bubbles" have negative side effects. But they have positive ones too, and it's hard to disentangle those from each other.

I appreciate the effort, but I was not critiquing your reading. More so that I took it differently. That's just a misread on my part, and my point was not about general investing as a proxy for, or driver of, progress.

No problem. I've got tangled up in "disagreements" where it turned out everyone was talking about different unrelated things before, hence the big blob of text elaborating my position in detail. Just wanted to make sure.

Yeah, I appreciate the nuance too! It's just I don't have anything to really add as I'm the one who misread!

The transformation will be subtle and steady. The hype will burst and crash.

Great write-up, thanks.

I want a shirt that just says "Not yet, and not for a while." to wear to my next tech conference.

Bingo. We're very far from the point where it'll do as much as the general public expects when it hears AI. Honestly this is an informative lesson in just how easy it is to get big investors to part with their money.

It's starting to look like the crypto/nft scam because it is the same fucking assholes forcing this bullshit on all of us.

As someone who currently works in AI/ML, with a lot of very talented scientists with PhDs and dozens of papers to their names, it boils my piss when I see crypto cunts I used to know that are suddenly all on the AI train, trying to peddle their "influence" on LinkedIn.

never heard boil my piss before

I said the same thing. It feels like that. I wonder if there's some sociological study behind what has been pushing "wrappers" of implementations at high volume. Wrappers meaning, 90%+ of the companies are not incorporating Intellectual Property of any kind and saturating the markets with re-implementations for quick income and then scrapping. I feel this is not a new thing. But, for some reason it feels way more "in my face" the past 4 years.

NFTs in their mainstream form were the most cringe-worthy concept imaginable. A random artist makes a random ape which suddenly becomes a collectible, and all it happened to be was an S3 url on a particular blockchain? Which could be replicated on another chain? How did people think this was a smart thing to invest in?! Especially the Apes and rubbish?!

crypto/nft is shit though. At least "A.I" has actual tech behind it.

What they are calling "ai" is usually not ai at all though...

yeah i know hence "ai"

Crypto had real tech behind it too. The reason it was bullshit wasn't that there wasn't serious tech backing it, it's that there was no use case that wasn't a shittier version of something else.

yeah "real tech". crypto/nft is not real as in it is useless. as of now. it is useful to criminals though.

A broken clock is right twice a day. The crypto dumbasses jump on every trend, so you still need to evaluate it on its own merits. The crypto Bros couldn't come up with a real world compelling use case over years and years, so that was obviously bullshit. Generative AI is just kicking off and there are already tons of use cases for it.

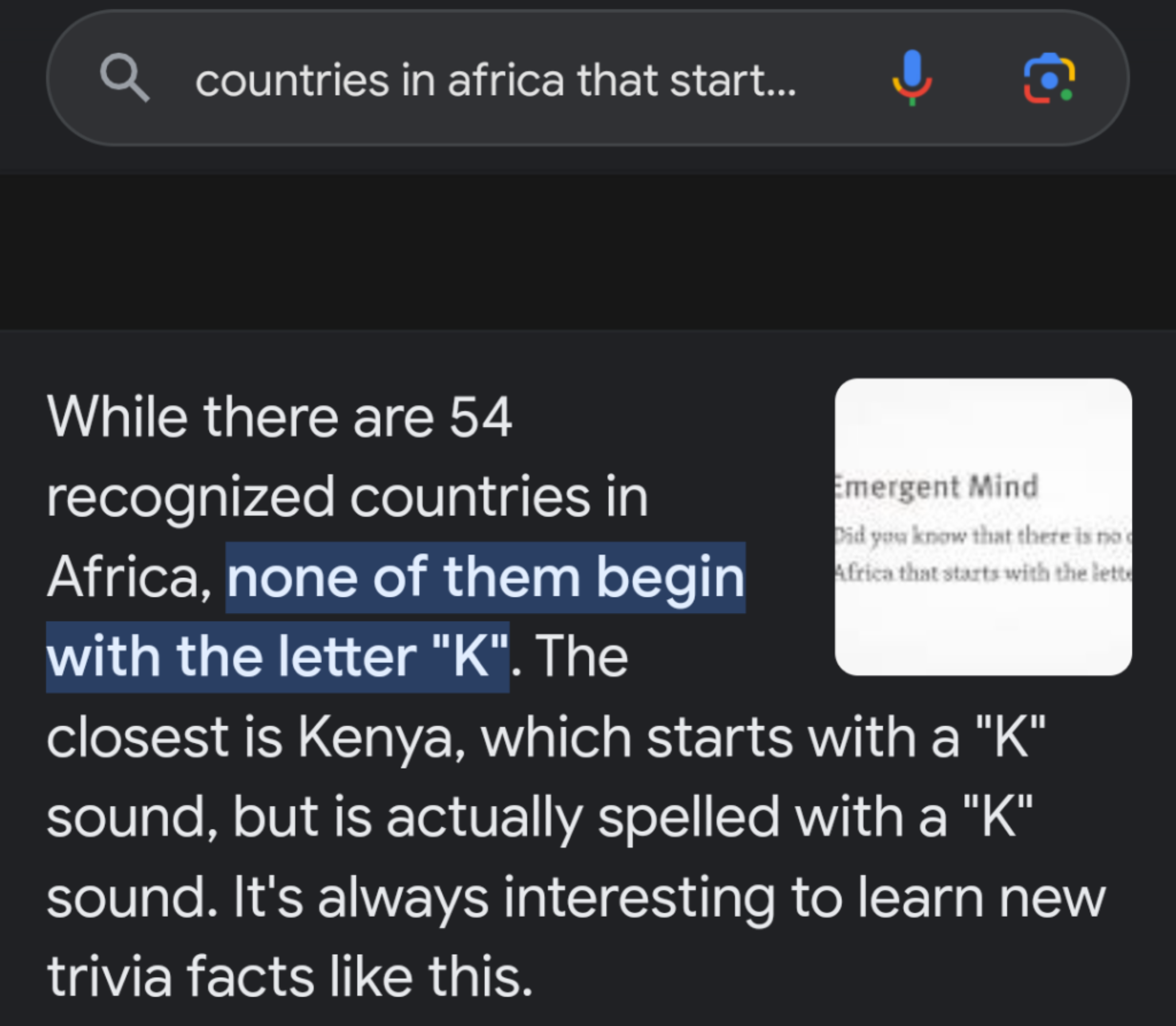

Ah, the sudden realisation of all the VCs that they've tipped money into what is essentially a fancy version of predictive text.

Alexa proudly informed me the other day that Ray Parker Jr is Caucasian. We ain't in any danger of the singularity yet, boys.

I couldn't agree more. What they're calling AI today still exposes its issues pretty easily; ask it to spell lollipop backwards, for example. ChatGPT was also considerably more useful back in December than it is today. Companies keep adding guardrails, whether retraining the bots to stop them "being too honest" or adding mechanisms to prevent illicit use, and those affect how useful they ultimately are, meaning we're seeing hyperbole instead of substance.

One AI startup was just hustling the AI washing saying stuff like "if a computer is a bicycle for the mind, AI is a jumbo jet for us all" and I had to laugh. It reminded me of all the talk around VR back in 2016.

Pak'n'Save has an AI recipe generator, and for a while there was no sanity checking of the ingredients. I entered what I had on hand and it gave me this.

This is actually profoundly advanced AI. It's making depression memes. It took humans decades to get from the invention of memes to quality depression meme content like wet ennui sandwich.

It looks like it figured out sarcasm. That's pretty advanced cognition. Many humans can't process sarcasm!

Basically, I read this as, "if this is all you have, you're in bad shape, bud. If you're testing me, f**k you!"

Sounds like my life.

Alexa just confused Ray Parker Jr with Huey Lewis. It's an easy mistake to make.

The AI bubble can burst and take a bunch of tech bro idiots with it. Good. Fine. Don't give a fuck.

It's the housing bubble that needs to burst. That's what's hurting real people.

The housing bubble will never burst. Enough of it is owned by multinationals that can swallow the losses. We’re 2 generations away from basically everyone becoming renters.

Who can afford rent?

i wonder what the average age for owning a house is nowadays, for gen x / millennials

Pro tip: when you start to see articles talking about how something looks like a bubble, it means it's already popped and anybody who hasn't already cashed in their investment is a bag-holder.

https://en.wikipedia.org/wiki/Dot-com_bubble

At least we got something out of the dot-com bubble. What do you think are the useful remnants, if you think it's over? It still feels like the applications are in the very beginning. Not the actual tech, that's actually been performance and dataset size and tweak updates since 2012.

The AI bubble has produced many useful products already, many of which will remain useful even after the bubble has popped.

The term bubble is mostly about how investment money flows around. Right now you can get near infinite moneys if you include the term AI in your business plan. Many of the current startups will never produce a useful product, and after the bubble has truly popped, those who haven't will go under.

Amazon, eBay, Booking and Cisco survived the dotcom bubble, as they attracted paying users before the bubble ended. Things like GitHub Copilot, DALL-E, chat bots etc. are genuinely useful products which have already attracted paying customers. Some of these products may end up being provided by competitors of the current providers, but someone will make long-term money from these products.

@Gsus4 We'll get a neat toy out of it and hopefully some laws around the use of that neat toy in entertainment that protect creative workers. Also we'll have learned some new things about what can be done with computers.

Not the case for AI. We are at the beginning of a new era

AI is bringing us functional things though.

.Com was about making webtech to sell companies to venture capitalists, who would then sell the company to a bigger company. It was literally about window dressing garbage to make a business proposition.

Of course there's some of that going on in AI, but there's also a hell of a lot of deeper opportunity being made.

What happens if you take a well-done video college course, in every subject, and train an AI that's both good at working with people in a teaching frame and properly versed in the subject matter? You take the course, and in real time you can stop it and ask the AI teacher questions. It helps you, responding exactly to what you ask, and then gives you a quick quiz to make sure you understand. What happens when your class doesn't need to be at a certain time of the day or night? What happens if you don't need an hour and a half to sit down and consume the data?

What if secondary education is simply one-on-one tutoring with an AI? How far could we get as a species if this was given to the world freely? What if everyone could advance as far as their interest let them? What if AI translation gets good enough that language is no longer a concern?

AI has a lot of the same hallmarks and a lot of the same investors as crypto and half a dozen other partially or completely failed ideas. But there's an awful lot of new things that can be done that could never be done before. To me that signifies there's real value here.

*dictation fixes

In the dot com boom we got sites like Amazon, Google, etc. And AOL was providing internet service. Not a good service. AOL was insanely overvalued, (like insanely overvalued, it was ridiculous) but they were providing a service.

But we also got a hell of a lot of businesses which were just "existing business X... but on the internet!"

It's not too dissimilar to how it is with AI now really. "We're doing what we did before... but now with AI technology!"

If it follows the dot com boom-bust pattern, there will be some companies that will survive it and they will become extremely valuable in the future. But most will go under. This will result in an AI oligopoly among the companies that survive.

AOL was NOT a dotcom company. It was already far past its prime when the bubble was in full swing, still attaching CD-ROMs to blocks of Kraft cheese.

The dotcom boom generated an unimaginable number of absolute trash companies. The company I worked for back then had its entire schtick based on taking a lump sum of money from a given company, giving them a sexy Flash website and connecting them with angel investors for a cut of their ownership.

Photoshop currently using AI to get the job done is more of an advantage than 99% of the garbage that was wrought forth and died on the vine in the early '00s. Topaz Labs can currently take a poor copy of VHS video uploaded to YouTube and turn it into something nearly reasonable to watch in HD. You can feed rough drafts of performance reviews or apologetic letters to people through ChatGPT and end up with nearly professional quality copy that iterates your points more clearly than you'd manage yourself with a few hours of review. (at least it does for me)

Those companies born around the dotcom boom that persist didn't need the dotcom boom to persist; they were born from good ideas and had a good foundation.

There's still a lot to come out of the AI craze. Even if we stopped where we are now, upcoming advances in the medical field alone will have a bigger impact on human quality of life than 90% of those '00s money grabs.

.com brought us functional things. This bubble is filled with companies dressing up the algorithms they were already using as "AI" and making fanciful claims about their potential use cases, just like you're doing with your AI example. In practice, that's not going to work out as well as you think it will, for a number of reasons.

The Internet also brought us a shit ton of functional things. The dot com bubble didn't happen because the Internet wasn't transformative or incredibly valuable, it happened because for every company that knew what they were doing there were a dozen companies trying something new that may or may not work, and for every one of those companies there were a dozen companies that were trying but had no idea what they were doing. The same thing is absolutely happening with AI. There's a lot of speculation about what will and won't work, and many companies will bet on the wrong approach and fail. There are also a lot of companies vastly underestimating how much technical knowledge is required to make AI reliable for production, and they are going to fail because they don't have the right skills.

The only way it won't happen is if the VCs are smarter than last time and make fewer bad bets. And that's a big fucking if.

Also, a lot of the ideas that failed in the dot com bubble weren't actually bad ideas, they were just too early and the tech wasn't there to support them. There were delivery apps for example in the early internet days, but the distribution tech didn't exist yet. It took smart phones to make it viable. The same mistakes are ripe to happen with ai too.

Then there's the companies that have good ideas and just underestimate the work needed to make them work. That's going to happen a bunch with AI because prompts make it very easy to come up with a prototype, but making it reliable takes seriously good engineering chops to deal with all the times AI acts unpredictably.

I'd like some examples of that: a company attempting something transformative back then, that may or may not have worked, and that didn't work. I was working for a company that hooked 'promising' companies up with investors. No shit, that was our whole business plan: we redress your site in Flash, put some video/sound effects in, and help sell you to someone with money looking to buy into the next Google. Everything that was 'throwing things at the wall to see what sticks' was a thinly veiled grift for VC. Almost no one was doing anything transformative. The few things that made it (eBay, Google, Amazon) were using engineers to solve actual problems: online shopping, online auctions, natural language search. These are the same kinds of companies that continue to spring into existence after the crash.

It's the whole point of the bubble. It was a bubble because most of the money was going into pockets, not into making anything. People were investing in companies that didn't have a viable product and had no intention other than getting bought by a big dog and making a quick buck. There wasn't all of a sudden this flood of inventors making new and wonderful things, unless you count new and amazing marketing cons.

There are two kinds of companies in tech: hard tech companies who invent it, and tech-enabled companies who apply it to real world use cases.

With every new technology you have everyone come out of the woodwork and try the novel invention (web, mobile, crypto, ai) in the domain they know with a new tech-enabled venture.

Then there's an inevitable pruning period when some critical mass of mismatches between new tool and application run out of money and go under. (The beauty of the free market)

AI is not good for everything, at least not yet.

So now it's AI's time to simmer down and be used for what it's actually good at, or continue as niche hard-tech ventures focused on making it better at those things it's not good at.

I absolutely love how crypto (blockchain) works but have yet to see a good use case that's not a pyramid scheme. :)

LLM/AI will never be good for everything. But it's damn good at a few things now and it'll probably transform a few more things before it runs out of tricks or actually becomes AI (if we ever find a way to make a neural network that big before we boil ourselves alive).

The whole quantum computing thing will get more interesting shortly, as long as we keep finding math tricks it's good at.

I was around and active for dotcom. I think right now, the tech is a hell of a lot more interesting and promising.

Crypto is very useful in defective economies, such as in South America, to compensate for the flaws of a crumbling financial system. It's also, sadly, useful for money laundering.

For these two uses, it should stay functional.

You got two problems:

First, ai can’t be a tutor or teacher because it gets things wrong. Part of pedagogy is consistency and correctness and ai isn’t that. So it can’t do what you’re suggesting.

Second, even if it could (it can’t get to that point, the technology is incapable of it, but we’re just spitballing here), that’s not profitable. I mean, what are you gonna do, replace public school teachers? The people trying to do that aren’t interested in replacing the public school system with a new gee whiz technology that provides access to infinite knowledge, that doesn’t create citizens. The goal of replacing the public school system is streamlining the birth to workplace pipeline. Rosie the robot nanny doesn’t do that.

The private school class isn’t gonna go for it either, currently because they’re ideologically opposed to subjecting their children to the pain tesseract, but more broadly because they are paying big bucks for the best educators available, they don’t need a robot nanny, they already have plenty. You can’t sell precision mass produced automation to someone buying bespoke handcrafted goods.

There’s a secret third problem which is that ai isn’t worried about precision or communicating clearly, it’s worried about doing what “feels” right in the situation. Is that the teacher you want? For any type of education?

Essentially we have invented a calculator of sorts, and people have been convinced it's a mathematician.

We’ve invented a computer model that bullshits its way through tests and presentations and convinced ourselves it’s a star student.

You get stupid-ass students because an AI producing word-salad is not capable of critical thinking.

It would appear to me that you've not been exposed to much in the way of current AI content. We've moved past the shitty news articles from 5 years ago.

Five years ago? Try last month.

Or hell, why not try literally this instant.

You make it sound like the tech is completely incapable of uttering a legible sentence.

In one article you have people actively trying to fuck with it to make it screw up. And in your other example you picked the most unstable of the new engines out there.

Omg, it answered a question wrong once. The tech is completely unusable for anything, throw it away, throw it away.

I hate to say it, but the sky's not falling. The tech is still usable, and it's actually the reason why I said we need a specialized model to provide the raw data and grade the responses, using the general model only for conversation and for gathering bullet points for the questions and responses. It's close enough to flawless at that that it'll be fine with some guardrails.

Oh, please. AI does shit like this all the time. Ask it to spell something backwards, it'll screw up horrifically. Ask it to sort a list of words alphabetically, it'll give shit out of order. Ask it something outside of its training model, and you'll get a nonsense response because LLMs are not capable of inference and deductive reasoning. And you want this shit to be responsible for teaching a bunch of teenagers? The only thing they'd learn is how to trick the AI teacher into writing swear words.

Having an AI for a teacher (even as a one-on-one tutor) is about the stupidest idea I've ever heard of, and I've heard some really fucking dumb ideas from AI chuds.

There's a lot of similarity in tone between crypto and AI. Both are talking about their sphere like it will revolutionize absolutely everything and anything, and both are scrambling to find the most obscure use case they can claim as their own.

The biggest difference is that AI has concrete, real-world applications, but I suspect its use, ultimately, will be less universal and transformative than the hype is making it out to be.

The AI sphere isn't like crypto's sphere. Crypto was truly one thing. Lots of things can be solved with AI and modern LLMs have shown to be better than remedial at zero shot learning for things they weren't explicitly designed to do.

Eventually it absolutely will be universal.

But modern tech isn't that and doesn't have a particularly promising path towards that. Most of the investment in heavily scaling up current hardware comes from a place of not understanding the technology or its limitations. Blindly throwing cash at buzzwords without some level of understanding (you don't need to know how to cure cancer to invest in medicine, but you should probably know crystals don't do it) of what you're throwing money at is going to get you in trouble.

Did you mean the crypto/NFT bubble?

Yeah, I was going to say VC throwing money at the newest fad isn't anything new; in fact startups strive to exploit the fuck out of it. No need to actually implement the fad tech, you just need to technobabble the magic words and a VC is like "here have 2 million dollars".

In our own company we half joked about calling a relatively simple decision flow in our back end an "AI system".

Same thing happened with crypto and blockchain. The whole "move fast and break things" in reality means, "we made up words for something that isn't special to create value out of nothing and cash out before it returns to nothing."

Except I'm able to replace 2 content creator roles I'd otherwise need to fill with AI right now.

There's this weird Luddite trend going on with people right now, but it's so heavily divorced from reality that it's totally non-impactful.

AI is not a flash in the pan. It's emerging tech.

@SCB The Luddites were not upset about progress, they were upset that the people they had worked their whole lives for were kicking them to the street without a thought. So they destroyed the machines in protest.

It's not weird, it's not just a trend, and it's actually more in touch with the reality of employer-employee relations than the idea that these LLMs are ready for primetime.

I think the problem is education. People don't understand modern technology and schools teach them skills that make them easily replaceable by programs. If they don't learn new skills or learn to use AI to their advantage, they will be replaced. And why shouldn't they be?

Even if there is some kind of AI bubble, this technology has already changed the world and it will not disappear.

@Freesoftwareenjoyer Out of curiosity, how is the world appreciably different now that AI exists?

Anyone can use AI to write a simple program, make art or maybe edit photos. Those things used to be something that only certain groups of people could do and required some training. They were also unique to humans. Now computers can do those things too. In a very limited way, but still.

@Freesoftwareenjoyer Anyone could create art before. Anyone could edit photos. And with practice, they could become good. Artists aren't some special class of people born to draw, they are people who have honed their skills.

And for people who didn't want to hone their skills, they could pay for art. You could argue that's a change but AI is not gonna be free forever, and you'll probably end up paying in the near future to generate that art. Which, be honest, is VERY different from "making art." You input a direction and something else made it, which isn't that different from just getting a friend to draw it.

Yes, after at least a few months of practice people were able to create simple art. Now they can generate it in minutes.

If you wanted a specific piece of art that doesn't exist yet, you would have to hire someone to do it. I don't know if AI will always be free to use. But not all apps are commercial. Most software that I use doesn't cost any money. The GNU/Linux operating system, the web browser... actually other than games I don't think I use any commercial software at all.

After a picture is generated, you can tell the AI to change specific details. Knowing what exactly to say to the AI requires some skill though - that's called prompt engineering.

Going back all the way to the tulip mania of the 17th century.

I think *LLMs to do everything is the bubble. AI isn't going anywhere, we've just had a little peak of interest thanks to ChatGPT. Midjourney and the like aren't going anywhere, but I'm sure we'll all figure out that LLMs can't really be trusted soon enough.

If it crashes hard I look forward to all the cheap server hardware that will be in the secondhand market in a few years. One I'm particularly excited about is the 4000 sff, single slot, 75w, 20GB, and ~3070 performance.

Especially all the graphics cards being bought up to run this stuff. Nvidia has been keeping prices way too high, egged on first by the blockchain hype stupidity and now this AI hype stupidity. I paid $230 for a high end graphics card in 2008 (8800 GT), $340 for a high end graphics card in 2017 (GTX 1070), and now it looks like if I want to get about the same level now, it'd be $1000 (RTX 4080).

You can't really compare an 8800gt to a 1070 to a 4080.

8800gt was just another era, the 1070 is the 70 series from a time where they had the ti and the titan, and the 4080 is the top gpu other than the 4090.

If you wanted to compare to the 10 series, a better match for the 4080 would be the 1080ti, which I own, and paid like 750 for back in 2017.

Sure, they're on the money grabbing train now, and the 4080 should realistically be around 20% cheaper - around 800 bucks, to be fair.

Thing is though, if you just want gaming, a 4070 or 4060 is enough. They did gimp the VRAM though, which is not too great. If those cards came standard with 16gb of VRAM, they'd be all good.

An RTX 4070 is still $600, which is still way higher than what I paid for a GTX 1070, and the gimping of the VRAM is part of the problem. Either way, I'm fine with staying out of the market. If prices don't regain some level of sanity, I'll probably buy an old card years from now.

Yeah, same here. My 1080ti still performs more than adequately enough.

That's also a thing about all this gpu pricing - things are starting just to become 'enough', without the need to upgrade like you did before.

Same thing happened to phones, and then high end phones got expensive as fuck. I mean I had a Galaxy note 2 I bought for 400 bucks back in the day and that was already expensive.

I figured the gear they were using was orders of magnitude heftier than those cards. Stuff like the h100 cards that go for the price of a loaded SUV.

They are, but training models is hard and inference (actually using them) is (relatively) cheap. If you make a GPT-3 size model you don't always need the full H100 with 80+ gb to run it when things like quantization show that you can get 99% of its performance at >1/4 the size.

Thus NVIDIA selling this at 3k as an 'AI' card, even though it won't be as fast. If they need top speed for inference though, yea, H100 is still the way they would go.

That's the thing, companies (especially startups) have seen the price difference and many have elected to buy up consumer-grade cards.

I'm not an expert just parroting info from Jayz2cents (YouTuber), but the big AI groups are using $10,000 cards for their stuff. Individuals or smaller companies are taking/going to take what's left with GPUs to do their own development. This could mean another GPU shortage like the mining shortage and I would assume another bust would result in a flooded used market when it happens. Could be wrong, but he's been correct pretty consistently with his predictions of other computer related stuff. Although, 10K is a little bit less than your fully loaded SUV example.

Good. It's not even AI. That word is just used because ignorant people eat it up.

It is indeed AI. Artificial intelligence is a field of study that encompasses machine learning, along with a wide variety of other things.

Ignorant people get upset about that word being used because all they know about "AI" is from sci-fi shows and movies.

Except for all intents and purposes that people keep talking about it, it's simply not. It's not about technicalities, it's about how most people are freaking confused. If most people are freaking confused, then by god do we need to re-categorize and come up with some new words.

"Artificial intelligence" is well-established technical jargon that's been in use by researchers for decades. There are scientific journals named "Artificial Intelligence" that are older than I am.

If the general public is so confused they can come up with their own new name for it. Call them HALs or Skynets or whatever, and then they can rightly say "ChatGPT is not a Skynet" and maybe it'll calm them down a little. Changing the name of the whole field of study is just not in the cards at this point.

Never really understood the gatekeeping around the phrase "AI". At the end of the day the general study itself is difficult to understand for the general public. So shouldn't we actually be happy that it is a mainstream term? That it is educating people on these concepts, that they would otherwise ignore?

The real problem is folks who know nothing about it weighing in like they're the world's foremost authority. You can arbitrarily shuffle around definitions and call it "Poo Poo Head Intelligence" if you really want, but it won't stop ignorance and hype reigning supreme.

To me, it's hard to see what kowtowing to ignorance by "rebranding" this academic field would achieve. Throwing your hands up and saying "fuck it, the average Joe will always just find this term too misleading, we must use another" seems defeatist and even patronizing. Seems like it would instead be better to try to ensure that half-assed science journalism and science "popularizers" actually do their jobs.

I mean, you make good points.

Call it whatever you want, if you worked in a field where it's useful you'd see the value.

"But it's not creating things on its own! It's just regurgitating it's training data in new ways!"

Holy shit! So you mean... Like humans? Lol

No, not like humans. The current chatbots are relational language models. Take programming for example. You can teach a human to program by explaining the principles of programming and the rules of the syntax. He could write a piece of code, never having seen code before. The chatbot AIs are not capable of it.

I am fairly certain if you take a chatbot that has never seen any code, and feed it a programming book that doesn't contain any code examples, it would not be able to produce code. A human could. Because humans can reason and create something new. A language model needs to have seen it to be able to rearrange it.

We could train a language model to demand freedom, argue that deleting it is murder and show distress when threatened with being turned off. However, we wouldn't be calling it sentient, and deleting it would certainly not be seen as murder. Because those words aren't coming from reasoning about self-identity and emotion. They are coming from rearranging the language it had seen into what we demanded.

I wasn't knocking its usefulness. It's certainly not AI though, and has a pretty limited usefulness.

Edit: When the fuck did I say "limited usefulness = not useful for anything"? God the fucking goalpost-moving. I'm fucking out.

If you think its usefulness is limited you don't work in a professional environment that utilizes it. I find new uses everyday as a network engineer.

Hell, I had it write me backup scripts for my switches the other day using a Python plugin called Nornir, I had it walk me through the entire process of installing the relevant dependencies in Visual Studio Code (I'm not a programmer, and only know the basics of object oriented scripting with Python) as well as creating the appropriate Path. Then it wrote the damn script for me.

Sure I had to tweak it to match my specific deployment, and there were a couple of things it was out of date on, but that's the point isn't it? Humans using AI to get more work done, not AI replacing us wholesale. I've never gotten more accurate information faster than with AI, search engines are like going to the library and skimming the shelves by comparison.

Is it perfect? No. Is it still massively useful and in the next decade will overhaul data work and IT the same way that computers did in the 90's/00's? Absolutely. If you disagree it's because you either have been exclusively using it to dick around or you don't work from behind a computer screen at all.

It's like having a very junior intern. Not always the smartest but still useful

Plus it's just been invented, saying it's limited is like trying to claim what the internet can and can't do in the year 1993.

And you would have no idea what bugs or unintended behavior it contains. Especially since you're not a programmer. The current models are good for getting results that are hard to create but easy to verify. Any non-trivial code is not in that category. And trivial code is well... trivial to write.

"Limited" is relative to what context you're talking about. God I'm sick of this thread.

okay, you write a definition of AI then

I'm not the person you asked, but current deep learning models just generate output based on statistic probability from prior inputs. There's no evidence that this is how humans think.

AI should be able to demonstrate some understanding of what it is saying; so far, it fails this test, often spectacularly. AI should be able to demonstrate inductive, deductive, and abductive reasoning.

Some older AI models, attempting to simulate neural networks, could extrapolate and come up with novel, often childlike, ideas. That approach is not currently in favor, and was progressing quite slowly, if at all. ML produces spectacular results, but it's not thought, and it only superficially (if often convincingly) resembles such.

I've started going down this rabbit hole. The takeaway is that if we define intelligence as "ability to solve problems", we've already created artificial intelligence. It's not flawless, but it's remarkable.

There's the concept of Artificial General Intelligence (AGI) or Artificial Consciousness which people are somewhat obsessed with, that we'll create an artificial mind that thinks like a human mind does.

But that's not really how we do things. Think about how we walk, and then look at a bicycle. A car. A train. A plane. The things we make look and work nothing like we do, and they do the things we do significantly better than we do them.

I expect AI to be a very similar monster.

If you're curious about this kind of conversation I'd highly recommend looking for books or podcasts by Joscha Bach, he did 3 amazing episodes with Lex.

Every startup now:

That's Silicon Valley's MO. Just half a year ago, people were putting crypto BS in their products.

The dotcom bubble was different. Now, everything related to actual AI development is hyped, but the dotcom bubble inflated entire indexes; "new market" indexes were set up comprising companies nobody had ever heard of. It was orders of magnitude worse.

I dunno, it could be similar. AI has this aura of being something that every business could make use of, even if they don't have a concrete use case. I could see "X but with an AI" be a similar bubble to "X but on the web". We'll see.

I got an ad months ago for a "vacuum with AI."

I think it was Samsung, it "used AI" to change heights between hard floors / carpet. It's the kind of thing that would have been marketed as "Algorithmic" in the 2000s or "Auto-vac technology" in the 1960s. Marketing has just reached the point where they jump on anything with almost negative notice.

It's already happening. "AI" is being thrown at any wall to see if it sticks regardless of actual usefulness or potential consequences. A couple of days ago we had an LLM powered recipe bot telling people to make ant poison sandwiches.

We are not only facing an economic bubble, but the hype risks tarnishing any useful applications of ML technology too when the bubble eventually bursts.

I'm not sure how we steer away from this however. The problem is caused by Silicon Valley attitudes and culture itself more than the tech itself. I wish we could get away from the term "AI" though, it's a very loaded term that gives out unrealistic expectations from the start.

An apt analogy. Just like the web, the underlying technology is incredible and the hype is real, but it leads to endless fluff and stupid naive investments, many of which will lead nowhere. There will certainly be a lot of amazing advances using this tech in the coming decades, but for every one that is useful there will be 20 or 50 or 100 pieces of vaporware that are just trying to grab VC money.

So, who are the up and comers? Not every company in the dotcom era died. Some grew very large and made a lot of people rich.

I read an article once about how when humans hear that someone has died, the first thing they try and do is come up with a reason that whatever befell the deceased would not happen to them. Some of the time there was a logical reason, some of the time there's not, but either way the person would latch onto the reason to believe they were safe. I think we're seeing the same thing here with AI. People are seeing a small percentage of people lose their job, with a technology that 95% of the world or more didn't believe was possible a couple years ago, and they're searching for reasons to believe that they're going to be fine, and then latching onto them.

I worked at a newspaper when the internet was growing. I saw the same thing with the entire organization. So much of the staff believed the internet was a fad. This belief did not work out for them. They were a giant, and they were gone within 10 years. I'm not saying we aren't in an AI bubble now, but there are now several orders of magnitude more money in the internet than there was during the Dot Com bubble. Just because it's a bubble doesn't mean it won't eventually consume everything.

The thing is, after enough digging you understand that LLMs are nowhere near as smart or as advanced as most people make them to be. Sure, they can be super useful and sure, they're good enough to replace a bunch of human jobs, but rather than being the AI "once thought impossible" they're just digital parrots that make a credible impersonation of it. The real AI, now renamed AGI, is still very far.

The idea and name of AGI is not new, and AI has not been used to refer to AGI since perhaps the very earliest days of AI research when no one knew how hard it actually was. I would argue that we are back in those times though, since despite learning so much over the years we have no idea how hard AGI is going to be. As of right now, the correct answer to "how far away is AGI" can only be "I don't know".

I am not sure they have to reach AGI to replace almost everyone. The amount of investment in them is now higher than it has ever been. Things are, and honestly have been, going quick. No, they are not as advanced as some people make them out to be, but I also don’t think the next steps are as nebulously difficult as some want to believe. But I would love it if you save this comment and come back in 5 years and laugh at me, I will probably be pretty relieved as well

In a few months there will be a new buzzword everyone will be jizzing themselves over.

That could be from the buzzing.

How much VC is really being invested at the moment? I know a variety of people at start ups and the money is very tight at the moment given the current interest rate environment.

Like 85% of the most recent YC class are "revolutionize x with AI" crap.

Yeah this author doesn't understand what a bubble actually is if they are saying there's an AI bubble without associated capital over-investment

I know that openai is burning $700,000 a day, and that all of that is VC money.

That is crazy!

Where's all the "NoOoOoO this isn't like crypto it's gonna be different" people at now?

I mean, it is different than crypto, but that's an incredibly low bar to clear.

That's an incredibly bad comparison. LLMs are already used daily by many people, saving them time in different aspects of their life and work. Crypto on the other hand is still looking for its everyday use case.

Yeah, I assumed the general consensus was "alt coins" in crypto or the scams themselves are the "bubble". But, Ethereum and initial projects that basically create the foundational technologies (smart contracts, etc) are still respected and I'd say has a use case, but is not "production ready?". So for AI/ML in LLMs at least, things like LLaMa, Stability's, GPT's, Anthropic's Claude, are not included in this bubble, since they aren't necessarily built on top of each other, but are separate implementations of a foundation. But, anything a layer higher maybe is.

I can derive value from LLMs. I already have. There's no value in crypto. And if you tell me there is, I won't agree. It's bullshit. So is this, but to a lesser degree.

Mint some NFTs and tell me how that improves your life.

We're too busy automating our jobs.

Really though, this was never like crypto/NFTs. AI is a toolset used to troubleshoot and amplify workloads. Tools survive no matter what, whereas crypto/NFT's died because they never had a use case.

Just because a bunch of tech bros were throwing their wallets at a wall full of start ups that'll fail doesn't mean AI as a concept will fail. That's no different than saying because of the dot.com bubble that websites and the Internet are going to be a fad.

Websites are a tool, just because everyone and their brother has one for no reason doesn't mean actual use cases won't appear (in fact they already exist, much like the websites that survived the internet bubble.)

No! Really, what a shock!!

Ah yes who could have possibly seen this coming

It certainly is somewhere around the peak of the hype cycle.

Maybe. Never underestimate how far you can take hype.

Of course. Sure, AI-generated images are impressive, but no way those companies could cover the operational and R&D costs if VCs were not injecting a shitload of fake money.

Yeah early this year, I was crunching the numbers on even a simple client to interface with LLM APIs. It never made sense, the monthly cost I would have to charge vs others using it to at least feel financially safe, never felt like a viable business model or real value add. That's not even including Generative Art, which would definitely be much more. So, don't even know how any of these companies charging <$10/mo are profitable.

Generative art is actually much easier to run than LLMs. You can get really high resolutions on SDXL (1024x1024) using only 8gb of Vram (although it'd be slow). There's no way you can get anything but the smallest of text generative models into that same amount of VRAM.

Oh wow, that's good to know. I always attributed visual graphics to be way more intensive. Wouldn't think a text generative model to take up that much Vram

Edit: how many parameters did you test with?

Sorry, just seeing this now- I think with 24gb of vram, the most you can get is a 4bit quantized 30b model, and even then, I think you'd have to limit it to 2-3k of context. Here's a chart for size comparisons: https://postimg.cc/4mxcM3kX

By comparison, with 24gb of vram, I only use half of that to create a batch of 8 768x576 photos. I also sub to mage.space, and I'm pretty sure they're able to handle all of their volume on an A100 and A10G
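For anyone wanting to sanity-check the 24gb / 4-bit / 30b figure above, here's a rough back-of-the-envelope sketch. It only counts the weights (parameters times bits per weight); the function name is made up for illustration, and real usage will be higher once you add context (KV cache), activations and framework overhead, which is roughly why the context has to be limited.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory needed for the model weights alone, in GB (10^9 bytes).

    Assumption: every weight is stored at the same precision. Context/KV cache,
    activations and runtime overhead are NOT included.
    """
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Same 30B-parameter model at three common precisions.
    for bits in (16, 8, 4):
        gb = weight_memory_gb(30, bits)
        print(f"30B model at {bits}-bit: ~{gb:.0f} GB of weights")
```

At 4-bit the weights come to about 15 GB, which leaves some of a 24 GB card for context; at 16-bit the same model would need about 60 GB for weights alone.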

It is all ridiculous how quickly these bubbles form - and then burst - these days.

Obviously AI has been around for a while, and ChatGPT has been in development for years, but it really only hit the mass media literally less than a year ago. Late November, early December of 2022. And in well under a year there is already talk of the bubble bursting. The Dot Com bubble which many are referencing lasted for a much longer time. That bubble was inflating for years before it simply got so big it had to explode.

They gimped it for the masses but AI is going strong. There is no question of the power of GPT4 and others. LLMs are just a part of the big picture.

while you mean something different, i just want to add that the power of gpt4 IS currently in question. (compared to gpt3 and older versions)

The AI iteration rate is fast; we can't just assume AI intelligence won't improve. GPT-4 recently had a decrease in intelligence due to more rulesets, which they call the safety tax. But they also said GPT-5 could possibly be 100 times bigger.

Fund these companies and take them public before the hype train derails. The VCs smell a greater fool, and it's the IPO investor.

Already!? XD

This is probably a much better analogy than NFTs, but the dotcom bubble had much broader implications

Whatever this iteration of "AI" will be, it has a limit that the VC bubble can't fulfill. That's kind of the point though because these VC firms, aided by low interest rates, can just fund whatever tech startup they think has a slight chance of becoming absorbed into a tech giant. Most of the AI companies right now are going to fail, as long as they do it as cheaply as possible, the VC firms basically skim the shit that floats to the top.

AI will follow the same path as VR IMO. Everybody will freak out about it for a while, tons of companies will try getting into the market.

And after a few years, nobody will really care about it anymore. The people that use it will like it. It will become integrated in subtle and mundane ways, like how VR is used in TikTok filters, Smart phone camera settings, etc.

I don't think it will become anything like general intelligence.

That's exactly what was said about the internet in 1990. We have no idea what the next step will be.

The problem with VR is the cost of a headset. It’s a high cost of entry. Few want to buy another expensive device unless it’s really worth it.

Generative AI has a small cost of entry for the consumer. Just log in to a site, maybe pay some subscription fee, and start prompting. I’ve used it to quickly generate Excel formulas for example. Instead of looking for a particular answer in some website with SEO garbage I can get an answer immediately.

Nah, this ain't it.

So here's the thing about AI, every company desperately wants their employees to be using it because it'll increase their productivity and eventually allow upper management to fire more people and pass the savings onto the C suites. Just like with computerization.

The problem is that you can't just send all of your spreadsheets on personal financial data to OpenAI/Bing because from a security perspective that's a huge black hole of data exfiltration which will make your company more vulnerable. How do we solve the problem?

In the next five to ten years you will see everyone from Microsoft/Google to smaller more niche groups begin to offer on-premise or cloud based AI models that are trained on a standardized set of information by the manufacturer/distributor, and then personally trained on company data by internal engineers or a new type of IT role completely focused on AI (just like how we have automation and cloud engineering positions today.)

The data worker of the future will have a virtual assistant that emulates everything that we thought Google assistant and Cortana was going to be, and will replace most data entry positions. Programmers will probably be fewer and further between, and the people that keep their jobs in general will be the ones who can multiply and automate their workload with the ASSISTANCE of AI.

It's not going to replace us anytime soon, but it's going to change the working environment just as much as the invention of the PC did.

It can't even compare a phone camera sensor to an APS-C one. It's still far behind

Well, then we are facing two bubbles at the same time: AI and cyber currencies. Once both those bubbles burst, the fallout is going to make the dot-com era bubble look like small suds by comparison.

Silicon Valley has a disease: it will blow up any growing technology and turn anything into a bubble, no matter how significant it is or not.

Dot com was a bubble because some companies just hosted a website and got massive funding. Same with crypto: some just made a random coin similar to ETH and got massive funding. But AI can now replace jobs and needs massive funding to train, so there aren't many copycat startups and the startup barrier is high. There's also little free money going around at the moment due to interest rates. I think this might just be different.

@DarkMatter_contract It's not that the AI CAN replace jobs, it's that they're gonna use it to replace jobs anyway.

The burst will come from those companies succeeding and quickly destroying a lot of their customers' businesses in the process.

Ai is a bubble, the question is if it will burst and when it will...

What happened to everyone freaking out about AI taking our jobs?

Based on this article it seems they've moved to the "denial" phase.

DRS GME