Outsourcing emotion: The horror of Google’s “Dear Sydney” AI ad | The company suggests using AI to write a child’s fan letter and the ad is so bad that Google turned off comments for it on YouTube

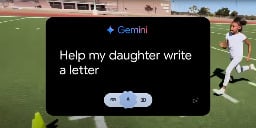

If you've watched any Olympics coverage this week, you've likely been confronted with an ad for Google's Gemini AI called "Dear Sydney." In it, a proud father seeks help writing a letter on behalf of his daughter, who is an aspiring runner and superfan of world-record-holding hurdler Sydney McLaughlin-Levrone.

"I'm pretty good with words, but this has to be just right," the father intones before asking Gemini to "Help my daughter write a letter telling Sydney how inspiring she is..." Gemini dutifully responds with a draft letter in which the LLM tells the runner, on behalf of the daughter, that she wants to be "just like you."

I think the most offensive thing about the ad is what it implies about the kinds of human tasks Google sees AI replacing. Rather than using LLMs to automate tedious busywork or difficult research questions, "Dear Sydney" presents a world where Gemini can help us offload a heartwarming shared moment of connection with our children.

Inserting Gemini into a child's heartfelt request for parental help makes it seem like the parent in question is offloading their responsibilities to a computer in the coldest, most sterile way possible. More than that, it comes across as an attempt to avoid an opportunity to bond with a child over a shared interest in a creative way.

This is one of the weirdest of several weird things about the people who are marketing AI right now

I went to ChatGPT right now and one of the auto prompts it has is “Message to comfort a friend”

If I was in some sort of distress and someone sent me a comforting message and I later found out they had ChatGPT write the message for them I think I would abandon the friendship as a pointless endeavor

What world do these people live in where they’re like “I wish AI would write meaningful messages to my friends for me, so I didn’t have to”

The thing they're trying to market is that a lot of people genuinely don't know what to say at certain times. Instead of replacing an emotional activity, it's meant to be used when you literally can't do it but need to.

Obviously that's not the way it should go, but it is an actual problem they're trying to talk to. I had a friend feel real down in high school because his parents didn't attend an award ceremony, and I couldn't help cause I just didn't know what to say. AI could've hypothetically given me a rough draft or inspiration. Obviously I wouldn't have just texted what the AI said, but it could've gotten me past the part I was stuck on.

In my experience, AI is shit at that anyway. 9 times out of 10 when I ask it anything even remotely deep it restates the problem like "I'm sorry to hear your parents couldn't make it". AI can't really solve the problem google wants it to, and I'm honestly glad it can't.

They're trying to market emotion because emotion sells.

It's also exactly what AI should be kept away from.

But AI also lies and hallucinates, so you can’t market it for writing work documents. That could get people fired.

Really though, I wonder if the marketing was already outsourced to the LLM?

Sadly, after working in Advertising for over 10 years, I know how dumb the art directors can be about messaging like this. It's why I got out.

A lot of the times when you don't know what to say, it's not because you can't find the right words, but the right words simply don't exist. There's nothing that captures your sorrow for the person.

Funny enough, the right thing to say is that you don't know what to say. And just offer yourself to be there for them.

Yeah. If it had any empathy this would be a good task and a genuinely helpful thing. As it is, it’s going to produce nothing but pain and confusion and false hope if turned loose on this task.

The article makes a mention of the early part of the movie Her, where he's writing a heartfelt, personal card that turns out to be his job, writing from one stranger to another. That reference was exactly on target: I think most of us thought outsourcing such a thing was a completely bizarre idea, and it is. It's maybe even worse if you're not even outsourcing to someone with emotions but to an AI.

You're in luck, you can subscribe to an AI friend instead. ~/s~

You’ve seen porn addiction yes, but have you seen AI boyfriend emotional attachment addiction?

Guaranteed to ruin your life! Act now.

Don’t date robots!

Brought to you by the Space Pope.

Shut up! You don't understand what me and Marilyn Monrobot have together!

Those AI dating sites always have the creepiest uncanny valley profile photos. It's fun to scroll them sometimes.

Uhh "subscribing to an AI friend" is technically possible in the form of character.ai sub. Not that I recommend it but in this day your statement is not sarcastic.

These seem like people who treat relationships like a game or an obligation instead of really wanting to know the person.

it's completely tone-deaf if that's their understanding of humanity. Similar to the iPad app ad that theluddite blog referred to here in his post about capture platforms. Making art, music and human emotional connections are not the tedious parts that need to be automated away ffs

My initial response is the same as yours, but I wonder... If the intent was to comfort you and the effect was to comfort you, wasn't the message effective? How is it different from using a cell phone to get a reminder about a friend's birthday rather than memorizing when the birthday is?

One problem that both the AI message and the birthday reminder have is that they don't require much effort. People apparently appreciate having effort expended on their behalf even if it doesn't create any useful result. This is why I'm currently making a two-hour round trip to bring a birthday cake to my friend instead of simply telling her to pick the one she wants, have it delivered, and bill me. (She has covid so we can't celebrate together.) I did make the mistake of telling my friend that I had a reminder in my phone for this, so now she knows I didn't expend the effort to memorize the date.

Another problem that only the AI message has is that it doesn't contain information that the receiver wants to know, which is the specific mental state of the sender rather than just the presence of an intent to comfort. Presumably if the receiver wanted a message from an AI, she would have asked the AI for it herself.

Anyway, those are my Asperger's musings. The next time a friend needs comforting, I will tell her "I wish you well. Ask an AI for inspirational messages appropriate for these circumstances."

I don't think the recipient wants to know the specific mental state of the sender. Presumably, the person is already dealing with a lot, and it's unlikely they're spending much time wondering what friends not going through it are thinking about. Grief and stress tend to be kind of self-centering that way.

The intent to comfort is the important part. That's why the suggestion of "I don't know what to say, but I'm here for you" can actually be an effective thing to say in these situations.

Don't need to ask an AI when every website is AI-generated blogspam these days

This is the problem I've had with the LLM announcements when they first came out. One of their favorite examples is writing a Thank You note.

The whole point of a Thank You note is that you didn't have to write it, but you took time out of your day anyways to find your own words to thank someone.

Sincerity is a foreign concept to MBAs, VCs, and anyone who thinks they're on a business Grind Set. They view the world as a game and interpersonal relationships as a game mechanic.

Ugh, who has time for that? I need all of my waking hours to be devoted to increasing work productivity and consuming products. Computers can feel my pesky feelings for me now.

Companies like Google don't understand how advanced AI algorithms work. They can sort of represent things like emotions by encoding relationships between high level concepts and trying to relate things together using logic.

This usually just means they'll echo the emotions of whomever gave them input and amplify them to make some form of art, though.

People with power at Google are often very hateful people who will say hurtful things to each other, especially about concepts like money or death.

Although I will use it to write resumes and cover letters when applying to jobs from now on. They use AI to weed out resumes. I figure the only way to beat that system is to use it against itself.

As an engineering manager, I've already received AI cover letters. Don't do that. They suck. They get "round filed" faster than no cover letter at all. It's insulting.

(Realistically if I couldn't tell the difference then it would be fine, but right now it's so fucking obvious.)

But apparently you're not using AI to filter the resumes. A huge number of companies are. 42% as of this year.

https://www.bbc.com/worklife/article/20240214-ai-recruiting-hiring-software-bias-discrimination

Robot, experience this dramatic irony for me!

reminds me of that bear from inside job

leans back and sips beer

Glad to see others have also keyed in on just how lame this ad was.

My immediate thought was, if you (the guy doing the voiceover as the father) are so mentally deficient that you can't even put together a four sentence paragraph of your own original thoughts for fanmail, then what hope do you have of doing anything else as a functioning adult?

Worse yet, what does this teach the kid?

It should be like a core memory for the kid to do this with her dad. It's like having an LLM to play catch or do tea parties with her.

Simpso... erm I mean Futurama did it!

It teaches the kid to rely more and more on AI for everything, just like Google wants.

They're already 'thanking' siri and alexa, this will be a very dangerous development.

Roko's basilisk is kind of bullshit but the meme is funny.

rokos basilisk is the most stupidest thing and I hate it so much. it's so obviously just plain wrong. it's just wrong. it's not even an interpretation thing. most stupidest and insane and useless idea ever.

edit: I'm still mad at that one YouTuber that did a video about rokos basilisk pretending it made even a little bit of sense.

It's creepypasta for edgelad nerds

Thanking a personified character doesn’t strike me as a bad thing.

Surely there's a more positive perspective where people are just naturally polite in their words and would struggle to communicate differently to a language bot.

It's pretty frustrating how the venn diagram of 'people who treat people like things', and 'people who treat things like people' is a near circle.

You… you joke, but I know a few parents who would absolutely fail at something like this. Hell, they fail at basic math, and are barely literate.

I’m not saying this is a great idea for everyone, or that the ad is good. But the idea that “no one needs this” is extremely short sighted. For god sakes, the literacy rate in America alone isn’t even 95%, and over 50% of Americans aren’t proficient in English.

Again. This ad sucks for lots of reasons. But don’t pretend idiots can’t make it through adulthood, never mind become parents. The idiots are usually the ones with the most kids.

AI systems are still a useful tool.

I wonder what would happen if the world found out that Sydney McLaughlin-Levrone (or any other celebrity/athlete/role model) was using Chat GPT to respond to fan mail. My gut feeling is that people would find it disingenuous at best -- and there would probably be significant outrage.

Where's the AI that does my dishes and cleans my house so I have more time to write, create, and connect with others? That's the technology I want -- not one that does the meaningful part and leaves the menial stuff up to me.

Wow, this is an unfair take and very judgemental. I can think of a dozen reasons why an adult might have trouble writing a letter aside from being "mentally deficient." Dyslexia, anxiety, poor education, not being a native speaker, ADHD, etc.

Trust me, I thought the ad was lame and a bleak use case for AI, but you don't have to crucify a parent for doing their best to help their kid.

That "etc." certainly includes living in an anti-intellectual society full of emotionally stunted people who learned that men shouldn't care about feelings and that reading is for dorks.

Totally agree.

lists mental deficiencies

So in the spring I got a letter from a student telling me how much they appreciate me as a teacher. At the time I was going through some s***. Still am frankly. So it meant a lot to me. That was such a nice letter.

I read it again the next day and realized it was too perfect. Some of the phrasing just didn't make sense for a high school student. Some of the punctuation.

I have no doubt the student was sincere in their appreciation for me, but once I realized what they had done it cheapened those happy feelings. Blah.

You should've asked Gemini what to feel about it and how to respond...

That's the problem with how they are doing it, everyone seems to want AI to do everything, everywhere.

It is now getting on my own nerves, because more and more customers want to have somehow AI integrated in their websites, even when they don't have a use for it.

We created a society of antisocial people who are maximized as efficient working machines to the point of drugging the ones that are struggling with it.

Of course they want AI to do it for them and end human interactions. It's simpler that way.

I’m curious, if they had gone to their parent, gave them the same info, and come to the same message… would it have been less cheap feeling?

And do you know that isn’t the case? “Hey mom, I’m trying to write something nice to my teacher, this is what I have but it feels weird can you make a suggestion?” Is a perfectly reasonable thing to have happened.

I think there's a different amount of effort involved in the two scenarios and that does matter. In your example, the kid has already drafted the letter and adding in a parent will make it take longer and involve more effort. I think the assumption is they didn't go to AI with a draft letter but had it spit one out with a much easier to create prompt.

... But why did it cheapen it when they're the one that sent it to you? Because someone helped them write it, somehow the meaning is meaningless?

That seems positively callous in the worst possible way.

It's needless fear mongering because it doesn't count because of arbitrary reason since it's not how we used to do things in the good old days.

No encyclopedia references... No using the internet... No using Wikipedia... No quoting since language and experience isn't somehow shared and built on the shoulders of the previous generations with LLMs being the equivalent of a literal human reference dictionary that people want to say but can't recall themselves or simply want to save time in a world where time is more precious than almost anything lol.

The only reason anyone shouldn't like AI is due to the power draw. And nearly every AI company is investing more in renewables than anyone else while pretending like data centers are the bane of existence while they write on Lemmy watching YouTube and playing an online game lol.

David Joyner in his article On Artificial Intelligence and Authenticity gives an excellent example on how AI can cheapen the meaning of the gift: the thought and effort that goes into it.

The assistant parallel is an interesting one, and I think that comes out in how I use LLMs as well. I’d never ask an assistant to both choose and get a present for someone; but I could see myself asking them to buy a gift I’d chosen. Or maybe even do some research on a particular kind of gift (as an example, looking through my gift ideas list I have “lightweight step stool” for a family member. I’d love to outsource the research to come up with a few examples of what’s on the market, then choose from those.). The idea is mine, the ultimate decision would be mine, but some of the busy work to get there was outsourced.

Last year I also wrote thank you letters to everyone on my team for Associate Appreciation Day with the help of an LLM. I’m obsessive about my writing, and I know if I’d done that activity from scratch, it would have easily taken me 4 hours. I cut it down to about 1.5hrs by starting with a prompt like, “Write an appreciation note in first person to an associate who…” then provided a bulleted list of accomplishments of theirs. It provided a first draft and I modified greatly from there, bouncing things off the LLM for support.

One associate was underperforming, and I had the LLM help me be “less effusive” and to “praise her effort” more than her results so I wasn’t sending a message that conflicted with her recent review. I would have spent hours finding the right ways of doing that on my own, but it got me there in a couple exchanges. It also helped me find synonyms.

In the end, the note was so heavily edited by me that it was in my voice. And as I said, it still took me ~1.5 hours to do for just the three people who reported to me at the time. So, like in the gift-giving example, the idea was mine, the choice was mine, but I outsourced some of the drafting and editing busy work.

IMO, LLMs are best when used to simplify or support you doing a task, not to replace you doing them.

This is exactly how I view LLMs and have used them before.

These people in these scenarios aren't going 'Amazon buy my gf a gift she likes.'

They're going, please write a letter to my professor thanking them for their help and all they've done for me in biology.

I don't know of anyone who trusts AI enough to just carte blanche fire off emails immediately after getting prompts back either.

The fear and cheapening of AI is the same fear and cheapening as every other advancement in technology.

It's not a real conversation unless you talk face to face like a man

say it in a group / write it on parchment and ink / pen and paper / typewriter / telegram / phone call / text message / fax / email. E: rip strikethroughs?

It's not a real paper if it's a meta analysis.

It's not it's not it's not.

All for arbitrary reasons that people have used to offset mundane garden levels of tedium or just outright ableist in some circumstances.

People also seriously overestimate their ability to detect AI writing or even pictures. That dude may very well have gotten a sincere letter without AI but they've already set it in their mind that the student wrote it with AI as if they know this student so well from 10 written assignments they probably don't care about to 1 potentially sincerely written statement to them.

If people like that think it cheapens the value, that's on them. People go on and on about removing pointless platitudes and dumb culturally ingrained shit but then clutch their pearls the moment one person toes outside the in-group.

It just feels so silly to me.

IT'S NOT ART UNLESS IT'S OIL ON CANVAS levels of dumb.

It's not altruistic/good-natured unless you don't benefit from it in any way and feel no emotion by doing it! You can't help the homeless unless you follow the rules! You can't give them money if you record it.

In the end, they still got that money. But somehow it devalues it because instead of raising two people up higher, you only raised one? It's foolishness.

People also seriously overestimate others' abilities and cheapen what their time is worth all the damn time.

That's not fan mail. That's spam.

"Hey Google, raise my children."

People have been trying that for a bit, it's not working too well

Sounds like Rimmer in Red Dwarf, who would then start trying to argue with Google as a whole or fix it.

I mean it kinda already is with all the parents putting kids in front of YouTube to watch Pregnant Spiderman breastfeed baby Elsa.

BNW

That's the perpetuum mobile of a certain kind of utopia.

Bolsheviks literally dreamed of "child combinates" (why would someone call it something like this, I dunno) where workers would offload their children to be cared for, while they themselves could work and enjoy their lives and such.

I'd say this tells enough about the kind of people these dreamers were and also that they didn't have any children of their own.

Though this is in the same row as the "glass of water" thing, which hints that there also weren't many women among them.

For some people utopia is a kind of Sparta with spaceships, where not only everything is common and there's no money, but also people own nothing, decide nothing, hold on to nothing, and children are collective property.

The people making these ads can't fathom anything past pure efficiency. It's what their entire job revolves around, efficiently using corporate resources to maximize the amount of people using or paying for a product.

Sure, I would like to be more efficient when writing, but that doesn't mean writing the whole letter for me, it means giving me pointers on how to start it, things to emphasize, or how to reword something that doesn't sound quite right, so I don't spend 10 minutes staring at an email wondering if the way I worded it will be taken the wrong way.

AI is a tool, it is not a replacement for humans. Trying to replace true human interaction with an LLM is like trying to replace an experienced person's job with a freshly hired intern with no experience. Sure, they can technically do the job, but they won't do it well. It's only a benefit when the intern works with the existing knowledgeable individuals in the field to do better work.

If we try to use AI to replace the entire process, we just end up with this:

That flowchart example is idiotic but I love it. The formal cover letter in between is more idiotic. It would be cool if we could collectively agree to just send "I'd like this job" instead of all the bullshit.

A lot of what we do as a society is redundant, but I do think fully written emails or cover letters have merit (even if it's the same template replicated for multiple applications).

It helps the reviewer understand if you're articulate with your speech, gives them additional context to your resume, and lets them better match applicants with their current work environment.

That said, a lot of the process is still redundant anyways, and considering many hiring processes are now entirely automated, a more concise, standardized method of providing the same information would likely be more manageable and efficient for most people.

There's already too many applicants for every job opening. If you make the process even more automated public job listings/applicants will be sidelined entirely.

My team hired a jr dev a few months ago. The posting got several thousand responses on LinkedIn alone. We noped out of wading through all of those and just went the referral route.

But, you and everyone else would just say "I want this job" but they want the best person for the job. Putting up with bullshit is invariably going to be part of the job.

They can compare my resume with the other applicants'. I don't mind.

I said all these things to my partner when I saw the ad as well.

I've spent more time helping my kid write Steam reviews of the games they're playing than this Dad did on writing a letter to his daughter's hero.

Simple as. Don't be surprised when the kid puts you in a crappy home to afford more Gemini credit or whatever.

Well, some parents sincerely think they give more love by buying some new shiny thing, or, say, using an LLM to write a letter, than they do by just talking.

Imagine a man, autistic but in denial ("I'M NORMAL") with constant imitation who can't say a word without looking like a broken toy with clearly fake emotions and refusing to understand that this is not what one does when they show love. When told how that looks they just try harder at imitation or get furious. They don't understand that sincere emotions do not require effort. If you're autistic, yours look different. But if you're autistic, but terribly afraid of being "not normal" (grown in ex-USSR backwater working-class environment), you won't accept the possibility and will just try harder to act. That'd be my dad (LLMs didn't exist back then, but).

It's tragic, not necessarily about putting less effort.

And this works for any pain people might try to cover with some technological perceived miracle. Which is why such things are poison which does get inhaled by some even now.

Once you realize that everyone that works in marketing is a soulless demon, the world starts to make a lot more sense.

Okay. I'm a transhumanist. I like AI, automation, and the abolishment of involuntary labor as well as obligatory adversity. Even I thought this ad was super fucking creepy. How the fuck do you justify sending your daughter an auto-generated letter? Now, not only do you not care enough to do it yourself, you're lying to her about it.

Other way around - the AI is writing a letter "from" the daughter to be sent to the athlete. Still BS though, and I'm sure famous people just love getting spam fan mail where the person couldn't be bothered to draft it themself.

I was remembering an ad that I saw yesterday(?), so either I mis-remembered, mis-understood, or mistook the ad mentioned in the article for the one I saw.

Regardless, ty for letting me know.

Imagine finding out that everything wise your parent had ever said to you was read verbatim from an AI tool.

It'd explain a lot, actually. 🤔

Yeah, since 2016 it's become apparent that my father never believed anything he said about morals when I was a kid. Just saying the words he thought he was supposed to say.

From my end, it's more to do with the fact that my parents' advice has been consistently bad and so have their life decisions.

If I look around me, the people have stopped caring and been lying about it for years.

Either Google knows its audience, or the ad was sent to the wrong crowd.

And then he couldn't even bother to choose the words himself

It's not implying he can't be bothered, but that the machine can do a better job.

...which may be true, depending on just how bad he is at writing. Like, I was just watching this classic the other day. If this guy writes like some of those people, the machine may in fact be better.

That said, for most people it's stupid, and the tech isn't able to do a better job at expressing such things.

Yet.

But doesn’t it matter that the machine isn’t expressing anything? It’s regurgitating words that are a facsimile of emotion. That matters to me. Especially in the long term. Since shorthand and texting became a thing, kids’ writing became way, way worse according to TAs and teachers I know. Which, that was a byproduct of a change in writing styles, so while kinda pathetic, it’s somewhat understandable. But this is just shoving itself between us and our own feelings. Say google gets their wish, everything we write to each other that ever matters more than a simple surface level conversation is expressed via LLMs. Where will that leave us? What does that leave us? We’re closing ourselves off from the world with technology. And we’re cheering for a new tech that will allow us to retreat even further away from human experience. That’s goddamn depressing if you ask me. And to answer my own question, it leaves us work, consumption, and fucking nothin.

This tech isn’t here to free us. From work, from tedium. It’s here to relegate us only to the tedium.

LLMs can also be helpful when your actual feelings should NOT be conveyed. For example, I can have a genuine response to someone along the lines of, "You are dumb for so many reasons, here are just a few of them that show you are out of touch with what our product can do, and frankly with reality itself. {Enumerated list with copious amounts of cursing and belittling}" Ok LLM, rewrite that message using professional office language because I emotionally refuse to.

Let's say that there is a single player MMO where all the other players are played by AI, but it is done so well that you can't really see the difference from real-human MMO players.

Would you play this? I would not. The fact that there is a human on the other side is important, even though it does not make any practical difference. Same with birthday wishes - that's why Facebook did not automate "Happy birthday!" even though it could.

Would you upload your personal data and voice to OpenAI for it to make a birthday wishes call to your mom? So convenient! She won't know the difference, and you get a 5 bullet-point summary afterwards! Such a hellscape.

I want an MMO where 90+% of the "players", are AI.

5% of the players are idk, "cylons" or vampires, or "outlaws" or whatever, and they have to hide among the townspeople. They need to act like AI. They need to think like AI. But they have objectives to destroy the ship, or gather an army of vampire spawn, or rob the bank, or whatever. To do this, they need to look like AI. They need to act like AI. They need to think like AI.

5% of the players are the "heroes" or "main characters" or "vampire hunters" or whatever. They are outed but have bonus powers. They have to root out the vampires or cylons or outlaws; whatever.

Basically a giant online game of mafia. Give the baddies special powers, give the heroes special powers. Weapons, armors, disguises, leveling, etc.. etc.. basic game mechanics.

But ultimately it's a giant game of mafia using the AI as fog of war.

I'd play the shit outta that

After suggesting it and thinking about this a bit further.

You could probably hack together a text version of this that works on lemmy.

Westworld vibes

There was an MMO that was single player, DotHack. It has its fans.

the game isn't tricking you though, and it's structured like a regular RPG or it would take 100 hours to get to the ending doing pointless grinding, but you get there just by following the plot.

That was more a MMO themed normal JRPG. It had a central plot focused on the main cast specifically that played out in the scenario of an MMO, with very scripted dialog and sequence of events.

Shit, online guides in MMOs are bad enough. "why aren't you following the meta" "you should be using this item and doing this build" These things basically make people bots. Having actual bots might be better.

Not only will people play it, they will play it in droves because at the end of the day, people are fluid, and fluid flows in predictable patterns.

You and I may be offended at the very idea of playing a game surrounded by fake people acting real, but for the average kid growing up in a world where reality is already a tenuous concept online, it will just be another strange experience in a growing list, and it might be really fun because of the things a game can do with complete control over the population of the "MMO."

Not in a million years. The next generation will though, they won't see any issue with it.

Unless something radically falls apart and makes people spurn electronic media entirely, some great Butlerian Jihad of the 21st century, we are going to see things get a LOT worse before they get better.

I guess they will answer such calls with AI to get a summary anyway...

Great points overall. I guess previous generations thought that a hand-written letter can't be replaced by a digital one, yet here we are.

Animal crossing fans rise up

...and then imagine the AI not even pretending to be human, instakilling everyone in sight and outnumbering human players :/

It's like the South Park episode about using chatgpt to message their SO

Was kinda surprised I forgot about this one lol. Such a great episode.

https://youtube.com/shorts/QGKq8NHbPAY?si=NJ8cclJqblze11yX

Let's change the like button on youtube videos into an AI assistant that writes a three page email of thanks to the creator whenever it is pressed.

Let's burn down the Amazon to do it.

Pshh fellow comrades....

Then you haven't seen the movie theater ad they are showing where they ask the Gemini AI to write a break up letter for them.

Anyone that does that, deserves to be alone for the rest of their days.

Ah, yes. I'm mostly on the receiving side of such and haven't had much luck in relationships, but getting ghosted after a few forced words, uneasy looks, maybe even kinda hurtedly-mocking remarks about my personality that I can't change is one thing, it's still human, though unjust, but OK.

While a generated letter with generated reasons and generated emotions feels, eh, just like something from the first girl I cared about, only her parents had amimia, so it wasn't completely her fault that all she said felt 90% fake (though it took me 10 years to accept that what she did actually was betrayal).

Reminds me of the movie Her, where all kinds of heartfelt letters were outsourced to professional agencies.

I agree. This ad was immediately disgusting, cringy, and deflated my already floundering hope for humanity. Google sucks.

Google is the Yahoo of 2000

Thank you! The ads from everywhere this Olympics have been so fucking weird. I even started a thread on mastodon and this ad was on it. https://hachyderm.io/@ch00f/112861965493613935

Ever since I moved to an ad-reduced life, everything has been nicer. I can't completely escape them, they are everywhere. But minimizing with ublock and pihole helps, then only using video services that don't have ads. Unfortunately, a lot have added ads, so I have quit those. I'll pay extra for ad-free, just because ads make my life so miserable.

I can't watch broadcast TV, it's too irritating. I can't browse the web on a device outside my network or phone. I don't use free apps. Hell, I don't listen to the radio.

I like to think it has made me a calmer person.

They were always weird but it is getting to the point where even normies are taking notice.

All that sex traffic that occurs for their event alone makes it an abomination.

My best friend, the Uber driver, which I prefer to shut up all the way home. But hey, what are friends for, he keeps me hydrated!

Is that a self-promotion?

The obvious missing element is another AI on Sydney’s end to summarize all the fan mail into a one-number sentiment score. At that point we can eliminate both the AIs and the mental effort, and just send each other single numbers via an ad-sponsored Google service.

Which they will unceremoniously murder after it fails to get enough traction in a month after launch.

Hey, my buddy's work is already doing that! Management no longer has any idea what the company does, but they know how often you click. It boils down to a decimal number, which is what they really need. Higher numbers are better.

This! I was appalled when this ad played, suggesting that ANYONE comes out of that fictional scenario pleased is ridiculous. No one wants to receive a crappy AI-written email, ESPECIALLY when the primary topic is emotional. Using an LLM to write a message for a loved one tells everyone that you don't actually care enough to write it yourself. And Google is putting their big check of approval on the whole scenario saying, "This is what we want you to use Gemini for." Absolutely abysmal.

The ONLY version of this ad that makes any sense is if the parent writing the email is illiterate or has a medical issue where they can't type. But I'd rather see them use AI to make dictation better and more powerful instead.

We're all switching to Kagi Search and moving our email to ProtonMail or the like right? I don't need this kind of crap in my digital tool kit.

Hate to say it, but Kagi is not great. Both in results and in stewardship.

Proton recently introduced an AI "writing assistant" for emails called Scribe and a bitcoin wallet sadly.

"This message really needs to be passionate and demonstrate my emotional investment, I'd better have a text generation algorithm do it for me"

"Hey Google, please write a letter from my family, addressed to me, that pretends that they love me deeply, and approve of me wholly, even though I am a soulless, emotionless ghoul that longs for the day we'll have truly functional AR glasses, so that I can superimpose stock tickers over the top of their worthless smiles."

"As a large language model, I'm not capable of providing a daydream representation of your most inner desires or fulfill your emotional requests. Please subscribe to have an opportunity to unlock these advanced features in one of our next beta releases."

Yeah, fully agree. This is one of the reasons big tech is dangerous with AI, their sense of humanity and their instincts on what's right are way off.

Oozes superficiality. Say anything do anything for market share.

It's 2027, the AI killer app never came, but LLMification has produced an unimaginable glut of mediocre media and the most popular AI application is to use it to find human sourced material.

The stock market is like a ship on fire, but you can buy video cards for pennies on the dollar.

And Google turned off display of dislikes. Although it was for Apple's ad.

The thing is, LLMs can be used for something like this, but just like if you asked a stranger to write a letter for your loved one and only gave them the vaguest amount of information about them or yourself you're going to end up with a really generic letter.

...but to give it the amount of info and detail it would need, you would probably end up already writing 3/4 of the letter yourself, which defeats the purpose of being able to completely ignore and write off those you care about!

This ad is on purpose, to make us believe that using AI like this is the most normal thing. It's kind of brainwashing. So they can sell it to us.

https://www.youtube.com/watch?v=LCPhbN1l024 Futurama at it again.

God I hope that all of these bullshit AI platforms tank these giant awful tech companies.

When I saw this ad a few days ago, my immediate, audible response was "I guess we don't need humans anymore."

I think AI has already taken over and it's putting out the ads.

Okay, but what if I can't put my feelings into words? If even LLMs have a better grip on human emotion than me?

Edit: Well, then I wouldn't have a daughter in the first place; and I don't. Yay?

Read more. Then, write more.

I think my problem lies elsewhere, as in "what" to read and write. But it is generally good advice, thanks.

That's precisely my point: Reading more (especially fiction) gives you additional situations, context and ways to face different situations, as well as vocabulary, to help you express yourself when needed.

"Hey google, get me a dinner reservation at Dorsia"

Let's see Paul Allen's fan letter

It would've been cooler if they used it to write a cool PDF page of info and stats on Sydney McLaughlin-Levrone

Or finding/buying plane tickets at the best price by searching all the sites

But that would imply that it can be relied upon for accuracy.

I think AI is great, but not for this. It's much better suited for, say, stuff like AI dungeon, or other entertainment (DougDoug on twitch/YouTube is the perfect example).

New movie just dropped Violet evergarden: Gemini's dream

Idk, I mean I think this is more honest and practical LLM advertising than what we've seen before

I like to say AI is good at what I'm bad at. I'm bad at writing emails, putting my emotions out there (unless I'm sleep deprived up to the point I'm past self consciousness), and advocating for my work. LLMs do what takes me hours in a few seconds, even running locally on my modest hardware.

AI will not replace workers without significant qualitative advancements... It can sure as hell smooth the edges in my own life

You think AI is better than you at putting your emotions out there????

Talking to a rubber duck or writing to a person who isn't there is an effective way to process your own thoughts and emotions

Talking to a rubber duck that can rephrase your words and occasionally offer suggestions is basically what therapy is. It absolutely can help me process my emotions and put them into words, or encourage me to put myself out there

That's the problem with how people look at AI. It's not a replacement for anything, it's a tool that can do things that only a human could do before now. It doesn't need to be right all the time, because it's not thinking or feeling for me. It's a tool that improves my ability to think and feel

well I am pretty sure Psychologists and Psychiatrists out there would be too polite to laugh at this nonsense.

Precisely, you are giving it a TON more credit than it deserves

At this point, I am kind of concerned for you. You should try real therapy and see the difference

Psychiatrists don't generally do therapy, and therapists don't give diagnoses or medication

Therapy is a bunch of techniques to get people talking, repeating their words back to them, and occasionally offering compensation methods or suggesting possible motivations of others. Telling you what to think or feel is unethical - therapy is about gently leading you to the realizations yourself. They can also provide accountability and advice, but they don't diagnose or hand you the answer - people circle around their issues and struggle to see it, but they need to make the connections themselves

I don't give AI too much credit - I give myself credit. I don't lie to myself, and I don't have trouble talking about what's bothering me. I use AI as a tool - these kinds of conversations are a mirror I can use to better understand myself. I'm the one in control, but through an external agent. I guide the AI to guide myself

An AI is not a replacement for a therapist, but it can be an effective tool for self reflection

I get what they mean. It can help you articulate what you're feeling. It can be very hard to find the right words a lot of the time.

If you're using it as a template and then making it your own then what's the harm?

It's the equivalent of buying a card, not bothering writing anything on it and just signing your name before mailing it out. The entire point of a fan letter (in this case) is the personal touch, if you are just going to take a template and send it, you are basically sending spam.

I am 100% for this if it's yet another busywork communication in the office; but personal stuff should remain personal.

This is the same reason people think giving cash as a valentine's gift is unacceptable LOL

Yeah I agree if you send it without doing any kind of personalisation. I think LLMs shine as a template or starting point for various things. From there it's up to the user to actually make it theirs.

exactly... AI used as a template factory would be good use.

The problem here (with the commercial in question) is that they present it as Gemini being able to write a proper fan letter that is not even prompted by the fan (it's the dad for some reason)... THAT is what makes it incredibly cringey

And to state the obvious; of course it would be helpful for anyone with a learning or speech disability, nobody in their right mind would complain about a "wheelchair doing all the work" for a person who cannot walk.

That can be, in many cases, because you don't read enough to have learned the proper words to express yourself. Maybe you're even convinced that reading isn't worth it.

If this is the case, you don't have anything worth saying. Better stay silent.

I think it can be, if you know how to use it

It literally cannot since it has zero insight to your feelings. You are just choosing pretty words you think sound good.

The future will be bots sending letters to bots and telling the few remaining humans left how to feel about them.

The old people saying we have lost our humanity will be absolutely right for once.

The choices you make have to be based on some kind of logic and inputs with corresponding outputs though, especially on a computer.

Sure, the ones I make... the ones the "AI" makes are literally based on statistical correlation to choices millions of other people have made

My prompt to AI (i.e. write a letter saying how much I love Justin Bieber) is actually less personal input, and value, than just writing "you rock" on a piece of paper... no matter what AI spews.

This would be OK for busywork in the office. The complaint here is not that AI is an OK provider of templates, the issue is that it pretends an AI generated fan mail, prompted by the father of the fan (not even the fan themselves) is actually of MORE value than anything the daughter could have put together herself.

Yes, but this is also its own special kind of logic. It's a statistical distribution.

You can define whatever statistical distribution you want and do whatever calculations you want with it.

The computer can take your inputs, do a bunch of stats calculations internally, then return a bunch of related outputs.

Yes, I know how it works in general.

The point remains that someone else prompting AI to say "write a fan letter for my daughter" has close to zero chance of representing the daughter, who is not even in the conversation.

Even in general terms, if I ask AI to write a letter for me, it will do so based 99.999999999999999% on whatever it was trained on, NOT me. I can then push more and more prompts to "personalize" it, but at that point you are basically dictating the letter and just letting AI do grammar and spelling

Again, you completely made up that number.

I think you should look up statistical probability tests for the means of normal distributions, at least if you want a stronger argument.

Of course I made it up... the point is that AI trains on LOADS of data and the chances that this data truly represents your own feelings towards a celebrity are slim...

I despise the Kardashians yet if I ask AI to write them a fan letter, it would give me something akin to whatever the people who like them may say. AI has no concept of what or how I like anything, it cannot since it is not me and has no way to even understand what it is saying

Yes, because more people with positive things to say about stuff like money and fame influenced the inputs.

Precisely... other people, not me

So how can you say anything written by AI represents YOU?

I'd view it as an opportunity for AI to provide guidance like "how can I express this effectively", rather than just an AI doing it instead of you in an "AI write this" way.

That's true too, it can give you examples to get you started, although it can be pretty hit or miss for that. Most models tend to be very clinical and conservative when it comes to mental health and relationships

I like to use it to actively listen and help me arrange my thoughts, and encourage me to go through with things. Occasionally it surprises me with solid advice, but mostly it's helpful to put things into words, have them read back to you, and decide if that sounds true

Dear Sydney...

https://www.youtube.com/watch?v=wfEEAfjb8Ko

Now we need the machine to write a handwritten letter, and sign it. To complete the effect of genuine human connection

This and the Nike ad have been the worst ads during the Olympics.

I saw a movie the other day, and all of the ads before the previews were about AI. It was awful, and I hated it. One of them was this one, and yes... Terrible.

I saw a similar ad in theaters this week, it started by asking Gemini to write a breakup letter and I thought my friend next to me was going to cry because she's going through a breakup but then right at the end it goes "...to my old phone, because the Pixel 9 is just so cool!"

Gemini is awesome, I use it all the time for applied algebra and coding but using it to replace human emotions is not awesome. Google can do better

I've been watching quite a lot of Olympics coverage on TV, but never seen any ads. Is there an official Olympics TV channel with these ads?

Being a non-native English speaker, this is actually one of the better uses of LLMs for me. When I need to write in "fancier" English I ask an LLM and use the output as a starting point (sometimes I end up doing heavy modifications, sometimes light). I mean, this is one of the more logical uses of an LLM: it is good at languages (unlike trying to get it to solve math problems).

And I don't agree with the POV that just because you use LLM output to find a good starting point it stops being personal.

Well, if you get anywhere with that fake facade, then it will catch up to you.

Better start reading nicely written English books while doing this...

I have been reading English books of all kinds for the better part of the last 30 years. Understanding languages is fine, but utilising them in an impressive and complex way simply does not come very easily to me.

Learning to use the tools available to you is not "fake" it's being smart. Anyone who would be like "oh you recognize your weak point and have found and used a tool effectively to minimize it...you're fired/get out of my life" is an asshole and an idiot.

If you use binggpt as a translator tool, and put a disclaimer that these are not your own words - kudos, you removed the need for a translator and the latency associated.

However, if you claim that you speak English and use this tool to create a false impression of proficiency, that is just usual lying.

Furthermore, lacking proficiency in any language and using a tool to "beautify" a paragraph in said language will generally fail to improve communication, because chatgpt is trying to infer and add information which just isn't there (details, connotations, phraseologisms). It will just add more garbage to the conversation, and most likely words and meanings that just aren't yours.

It's fine. Eventually when people start using this crap en masse the people on the other end will just be using LLMs to distill the bullshit down to 3 key points anyway.

That would be bizarre, lol

Let’s say one person writes 3 pages with some key points, then another extracts modified points due to added LLM garbage, then sends them on in a 2-page essay to someone else, and they again extract modified points. The original message is long gone and a failure to communicate has occurred, but the bots talk to each other, so to speak, producing even more garbage.

In the end we are drowning in a humongous pile of generated garbage and no one can effectively communicate anymore

The funny thing is this is mostly true without LLMs or other bots. People and institutions can't communicate because of leviathan amounts of legalese, say-literally-nothing-but-hide-it-in-a-mountain-of-bullshitese, barely-a-correlation-but-inflate-it-to-be-groundbreaking-ese, literally-lie-but-its-too-complicatedly-phrased-nobody-can-call-false-advertising-ese.

What about using an LLM to extract actual EULA key points?

I wouldn’t rely on an LLM to read anything for you that matters. Maybe it will do OK nine out of ten times, but when it fails you won’t even know until it is too late.

What if the EULA itself was ChatGPT-generated from another chat-generated output from another, etc.? Madness. Such a EULA will suddenly be pure garbage, and with everyone cutting costs and relying on AI so much, no one will even notice until it’s all FUBAR.

So sure it will initially seem like a helpful tool, make key points from this text that was generated by someone from some other key points extracted by gpt but the mistakes will multiply in each iteration.

Everyone assumes I am talking about taking something ChatGPT spews out and using it as it is, whereas the only thing I said was to use it as an initial starting point, i.e. overcoming the blank slate block. When everyone is so horrible at understanding what other people try to convey, I assume you wouldn't lose much if you used ChatGPT as it is anyways lol.

I see your point and can agree in the cases where the tool won't be available to you, or if there is an intent to deceive.

But to flip the script, I'm pretty good at spelling but even then there are words I fuck up the spelling and it's caught by a spell checker. Am I a liar for submitting things without pointing out my spelling errors that a computer caught? Or is there a recognition that this is a common tool available and I've effectively used it to improve my communication, so this is just standard practice?

I would accept a spell checker, for a few reasons: one - it doesn't really change the meanings, or the words, just polishes tiny fails; two - English is an abysmal language which has the largest percentage of dyslexic people of any language, and it's associated with the fact that the dictionary is a mix of words from many languages, and they adhere neither to a single rule of spelling nor to 5 of them...

The problem with this is that effectively you aren’t speaking anymore, the bot does it for you. And if on the other side someone does not read anymore (the bot does it for them) then we are in a very bizarre situation where all sorts of crazy shit starts to happen that never did.

You will ‘say’ something you didn’t mean at all, they will ‘read’ something that wasn’t there. The very language, communication collapses.

If everyone relies on it this will lead to total paralysis of society because the tool is flawed but in such a way that is not immediately apparent until it is everywhere, processes its own output and chokes on the garbage it produces.

It wouldn’t be so bad if it was immediately apparent, but it seems so helpful and nice; what can go wrong?

Desperation...

Meh. How many people used to copy "meaningful" mother's day cards, birthday cards, wedding vows, speeches and whatnot from others? That was even a thing well before the internet itself.

Using LLMs for things people aren't passionate about and/or lack the experience of finding the right words is a great use case.